
Since the launch of ChatGPT in late 2022 (the event that inaugurated the ongoing AI revolution), generative AI models have become more powerful and diverse. New models with varied sizes, features, modalities, and uses are reaching the market every day, making it difficult to ascertain the limits of the possible.

Overall, there is a clear trend towards developing bigger and more complex models. Increasing the number of model parameters and the amount of training data is widely believed to be the best strategy for improving the performance of large language models (LLMs), the technology behind text-generating AI tools such as ChatGPT. Check out our separate article to learn what LLMs are in detail.

However, given the rising concerns about the resources required to train and run these big models (as well as their associated environmental footprint), efficiency in AI development and deployment is rapidly gaining momentum.

This article presents Stable Code 3B, a model released by Stability AI and designed specifically for accurate, responsive code generation. Despite being considerably smaller, it delivers performance comparable to larger code models such as Code Llama 7B.

What is Stable Code 3B?

Released in January 2024 by Stability AI, Stable Code 3B is an LLM with roughly 3 billion parameters developed for coding. It is an improved successor to Stable Code Alpha 3B.

Stable Code 3B excels at code completion and can also serve as an educational tool for novice programmers. The model is free to use for research and personal purposes. For commercial applications, users need to subscribe to one of the Stability AI Memberships.

Stable Code 3B is one of the strongest LLMs in its size class (see its performance metrics in the next section), achieving strong results with a significantly smaller footprint. Thanks to its compact design and efficiency, Stable Code 3B can run offline on common laptops, even those without a dedicated GPU, such as a MacBook Air.
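A back-of-the-envelope calculation shows why a model of this size can fit on an ordinary laptop. The minimal sketch below assumes the 2.7 billion parameters cited later in this article and estimates only the memory needed to hold the weights; activations, the KV cache, and runtime overhead are ignored, and the quantized formats listed are common options for local inference rather than something Stability AI specifies here.

```python
# Rough estimate of the memory needed just to hold model weights.
# ASSUMPTION: ~2.7 billion parameters; activations, KV cache and
# runtime overhead are NOT included.
NUM_PARAMS = 2.7e9

# Bytes per parameter for some common storage formats
BYTES_PER_PARAM = {
    "float32": 4.0,
    "bfloat16/float16": 2.0,
    "8-bit quantized": 1.0,
    "4-bit quantized": 0.5,
}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Return the size of the weights in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


for fmt, nbytes in BYTES_PER_PARAM.items():
    print(f"{fmt:>17}: {weight_memory_gb(NUM_PARAMS, nbytes):5.2f} GB")
```

At 16-bit precision the weights come to roughly 5.4 GB, and 4-bit quantization would shrink them to about 1.35 GB, which makes running the model on a laptop without a dedicated GPU plausible.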

How Does Stable Code 3B Work?

Stable Code 3B is a compact model with 2.7 billion parameters (rounded to 3B in its name). It is a decoder-only transformer with an architecture similar to Meta’s LLaMA. Check out our separate article to learn more about LLaMA.
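To make the parameter count concrete, the sketch below estimates the size of a decoder-only transformer from its configuration. The values in the hypothetical `DecoderConfig` (vocabulary size, hidden size, MLP width, 32 layers, untied input and output embeddings, LayerNorm with biases) are assumptions modelled on the StableLM-3B-4E1T family and should be checked against the model’s `config.json`; the formula itself is the standard per-block count of attention, MLP, and normalization weights.

```python
# Estimate the parameter count of a decoder-only transformer.
# ASSUMPTION: the default config values are modelled on StableLM-3B-4E1T;
# verify them against the real config.json before relying on the result.
from dataclasses import dataclass


@dataclass
class DecoderConfig:
    vocab_size: int = 50304
    hidden_size: int = 2560
    intermediate_size: int = 6912
    num_layers: int = 32
    tie_embeddings: bool = False


def count_parameters(cfg: DecoderConfig) -> int:
    d, ff = cfg.hidden_size, cfg.intermediate_size
    attention = 4 * d * d  # Q, K, V and output projections (no biases assumed)
    mlp = 3 * d * ff       # gated MLP: gate, up and down projections
    norms = 2 * 2 * d      # two LayerNorms per block, weight + bias each
    per_layer = attention + mlp + norms
    embeddings = cfg.vocab_size * d
    lm_head = 0 if cfg.tie_embeddings else cfg.vocab_size * d
    final_norm = 2 * d     # LayerNorm after the last block
    return cfg.num_layers * per_layer + embeddings + lm_head + final_norm


total = count_parameters(DecoderConfig())
print(f"{total:,} parameters (~{total / 1e9:.1f}B)")
```

Under these assumptions the total comes to roughly 2.8 billion, in line with the figure quoted above, and more than 90% of it sits in the 32 repeated transformer blocks rather than in the embeddings.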

Stable Code 3B’s training process is clearly inspired by Meta’s Code Llama. The model starts from Stability AI’s pre-trained LLM StableLM-3B-4E1T, which serves as its foundation model.

The model was then fine-tuned in two steps. In the first, it was trained on multiple code and code-related datasets, including CommitPack, GitHub Issues, StarCoder, and several math datasets. The training data cover 18 widely used programming languages, including Python, R, Java, and C.

In the second step, the model was further fine-tuned on longer sequences of 16,384 tokens using the fill-in-the-middle (FIM) technique, following the approach described in the Code Llama research paper. This step allows the model to support long context windows of up to 100k tokens, which is particularly useful for coding tasks: with longer input sequences, developers can give the model more context and larger chunks of their codebase, helping it generate more relevant and accurate outputs.
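Fill-in-the-middle training means the model can be prompted with the code both before and after a gap and asked to produce what goes in between. The sketch below only builds such a prompt as a string. The `<fim_prefix>`, `<fim_suffix>`, and `<fim_middle>` markers are an assumption based on the StarCoder-style convention and should be confirmed against the model card or tokenizer, and `build_fim_prompt` is a hypothetical helper.

```python
# Build a fill-in-the-middle (FIM) prompt in prefix-suffix-middle order.
# ASSUMPTION: StarCoder-style special tokens; confirm them against the
# model's tokenizer before use.
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Ask the model to generate the code that belongs between prefix and suffix."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


prefix = "def fib(n):\n"
suffix = "    else:\n        return fib(n - 2) + fib(n - 1)\n"
prompt = build_fim_prompt(prefix, suffix)
print(prompt)
```

The resulting string would be tokenized and passed to `model.generate()` just like an ordinary prompt; the text generated after `<fim_middle>` is the model’s proposal for the gap, which here would be the function’s base case.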

Stable Code 3B Performance Metrics

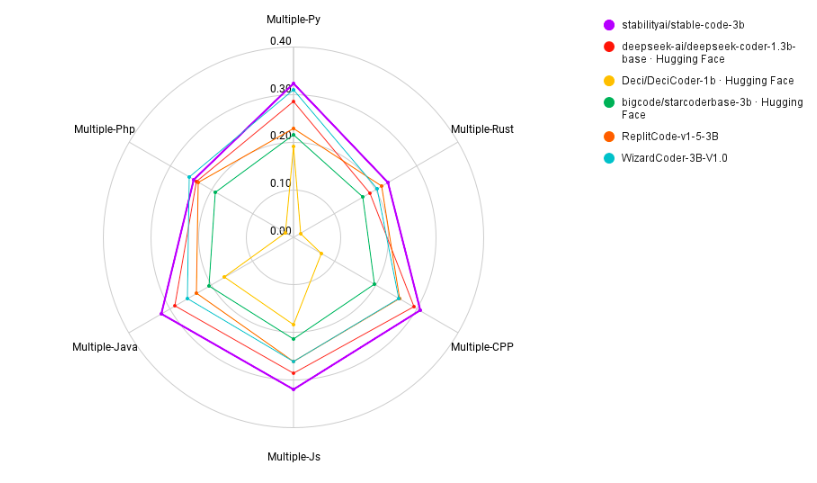

Stability AI’s developers have created a model that achieves state-of-the-art performance compared to models of similar size on the MultiPL-E metrics across multiple programming languages tested. The comparison is illustrated in the following image:

MultiPL-E performance comparison of models with up to 3B parameters. Source: Stability AI
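MultiPL-E translates HumanEval-style programming problems into many languages and scores a model by whether its generated code passes each problem’s unit tests; results are typically reported as pass@1. The sketch below implements the unbiased pass@k estimator introduced with HumanEval. The per-problem counts are made up for illustration, and the exact evaluation settings Stability AI used are not stated here.

```python
# Unbiased pass@k estimator used by HumanEval-style benchmarks.
# n = samples generated per problem, c = samples that pass the unit tests.
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Probability that at least one of k samples drawn from n passes."""
    if n - c < k:
        return 1.0  # every size-k subset contains a passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


# HYPOTHETICAL per-problem results: (samples generated, samples passing)
results = [(20, 5), (20, 0), (20, 20), (20, 1)]
score = sum(pass_at_k(n, c, k=1) for n, c in results) / len(results)
print(f"pass@1 = {score:.3f}")
```

For k = 1 the estimator reduces to c / n per problem, so the benchmark score is simply the average fraction of passing samples; generating several samples per problem mainly lowers the variance of that estimate.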

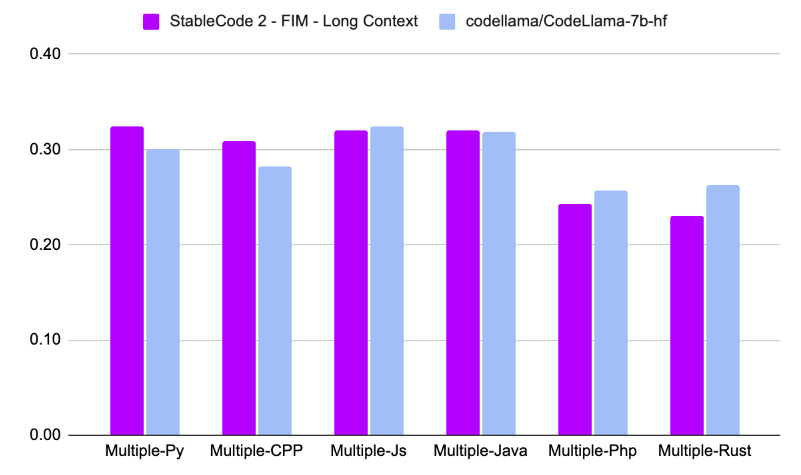

Stabe Code 3B also excels compared to significantly bigger models. For example, it achieves the same level of performance as CodeLLaMA 7b, its main inspiration, with a 60% model size reduction.

Comparison of Stable Code 3B with Code Llama 7B. Source: Stability AI
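The size comparison is simple arithmetic. The sketch below assumes about 6.7 billion parameters for Code Llama 7B (an approximate count; the model is nominally labelled 7B) and the 2.7 billion quoted earlier for Stable Code 3B.

```python
# Relative size reduction between two models, from parameter counts.
# ASSUMPTIONS: 2.7B for Stable Code 3B, ~6.7B for Code Llama 7B.
def size_reduction(small: float, large: float) -> float:
    """Fraction by which `small` is smaller than `large`."""
    return 1.0 - small / large


reduction = size_reduction(2.7e9, 6.7e9)
print(f"{reduction:.0%} fewer parameters")
```

Using the nominal 7B instead gives roughly 61%, so either way the reduction rounds to about 60%.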

Stable Code 3B Applications

AI-powered coding assistants like Stable Code 3B offer many opportunities in software development and data analysis. Here is a list of some of the ways programmers are already using AI:

Task automation

Stable Code 3B can help automate repetitive, mundane tasks, such as writing basic SQL queries, performing exploratory data analysis, and streamlining data science projects. This lets programmers skip time-consuming chores and focus on more complex and challenging work. To see how this works in practice, check out our illustrative guide to using ChatGPT for data science.
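Because the base Stable Code 3B model is trained primarily for code completion, a task is usually phrased as the beginning of the code rather than as a chat-style instruction: a comment describing the goal, followed by a function signature for the model to continue. The hypothetical helper below builds such a prompt; its name and layout are illustrative assumptions, not an official prompting format.

```python
# Turn a plain-English task into a completion-style prompt:
# comment lines describing the goal, then a signature to be continued.
# HYPOTHETICAL helper for illustration only.
def completion_prompt(task: str, signature: str, comment_prefix: str = "#") -> str:
    """Return the task as comments followed by the signature and a newline."""
    comment_lines = [f"{comment_prefix} {line}" for line in task.strip().splitlines()]
    return "\n".join(comment_lines) + "\n" + signature + "\n"


prompt = completion_prompt(
    "Return the 10 customers with the highest total order value\n"
    "using the sqlite3 connection `conn`.",
    "def top_customers(conn):",
)
print(prompt)
```

The model would then be asked to continue this text, ideally producing the function body with the SQL query; the `comment_prefix` parameter lets the same idea work for languages such as SQL (`--`) or C (`//`).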

Bug fixing

Debugging often takes considerable time and can be challenging, especially in complex projects with hundreds of lines of code. Stable Code 3B can speed up this process by scanning your code in seconds and suggesting fixes to both the code and its structure. Thanks to its long context window, you can give the model large scripts and use it to help identify potential errors and pitfalls in your code.
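Even a long context window has a limit, so very large files may still need to be split before being sent to the model. The sketch below splits source code into line-aligned chunks under a token budget. It approximates token counts as four characters per token, a rough rule of thumb rather than the model’s real tokenization, and the budget of 500 used in the demo is an arbitrary illustrative value.

```python
# Split a long source file into line-aligned chunks that fit a token budget.
# ASSUMPTION: ~4 characters per token; a real pipeline should count tokens
# with the model's own tokenizer instead.
def approx_tokens(text: str) -> int:
    return max(1, len(text) // 4)


def chunk_source(source: str, max_tokens: int) -> list[str]:
    chunks: list[str] = []
    current: list[str] = []
    current_tokens = 0
    for line in source.splitlines(keepends=True):
        line_tokens = approx_tokens(line)
        # Start a new chunk when adding this line would exceed the budget.
        # (A single line longer than the budget still becomes its own chunk.)
        if current and current_tokens + line_tokens > max_tokens:
            chunks.append("".join(current))
            current, current_tokens = [], 0
        current.append(line)
        current_tokens += line_tokens
    if current:
        chunks.append("".join(current))
    return chunks


source = "".join(f"x{i} = {i} * 2\n" for i in range(1000))
chunks = chunk_source(source, max_tokens=500)
print(f"{len(chunks)} chunks")
```

Splitting on line boundaries keeps each chunk syntactically readable; in practice the budget should leave room below the context limit for the model’s answer.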

Code optimization

When working on complex projects that require large amounts of computational resources, efficiency is a must. The way code is written can severely affect efficiency. Stable Code 3B can help rewrite your code in order to improve efficiency, thereby saving you time, resources, and money.
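As an illustration of the kind of rewrite a coding assistant might suggest (this is not actual Stable Code 3B output), the sketch below replaces repeated membership tests on a list, which scan the list every time, with lookups in a set built once. Both versions return the same result in the same order.

```python
# Before/after example of a common efficiency rewrite (illustrative only).
def common_items_slow(a: list[int], b: list[int]) -> list[int]:
    return [x for x in a if x in b]  # scans list `b` for every x: O(len(a) * len(b))


def common_items_fast(a: list[int], b: list[int]) -> list[int]:
    b_set = set(b)                       # build the set once: O(len(b))
    return [x for x in a if x in b_set]  # average O(1) lookup per x


a = list(range(0, 5_000, 3))
b = list(range(0, 5_000, 5))
assert common_items_slow(a, b) == common_items_fast(a, b)
print(len(common_items_fast(a, b)), "common items")
```

The rewrite trades a little extra memory for the set in exchange for turning a quadratic loop into a roughly linear one, which matters more as the inputs grow.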

Code interpretability

Understanding other people’s code can be hard, especially for junior developers. Stable Code 3B can not only suggest code improvements but also provide detailed explanations of a given piece of code, helping you learn faster.

To learn how generative AI can be used for learning purposes, we highly recommend trying DataCamp Workspace. We use the GPT-3.5 and GPT-4 models to power our data science IDE and help you understand complex syntax. Check out our separate guide to get started with DataCamp Workspace.

How to Download the Stable Code 3B Model

The easiest way to get started with Stable Code 3B is through Hugging Face’s transformers library. You can load the model into your Python environment and start generating code with the following snippet, which uses a GPU when one is available and otherwise runs on the CPU:

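```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Download the tokenizer and model weights from the Hugging Face Hub
tokenizer = AutoTokenizer.from_pretrained("stabilityai/stable-code-3b", trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(
    "stabilityai/stable-code-3b",
    trust_remote_code=True,
    torch_dtype="auto",  # keep the dtype stored in the checkpoint
)

# Use a GPU if one is available; otherwise fall back to the CPU
device = "cuda" if torch.cuda.is_available() else "cpu"
model.to(device)

# Prompt the model with the beginning of a Python file
inputs = tokenizer("import torch\nimport torch.nn as nn", return_tensors="pt").to(model.device)

# Sample up to 48 new tokens; a low temperature keeps the output focused
tokens = model.generate(
    **inputs,
    max_new_tokens=48,
    temperature=0.2,
    do_sample=True,
)

print(tokenizer.decode(tokens[0], skip_special_tokens=True))
```

How to Use Stable Code 3B for Commercial Applications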

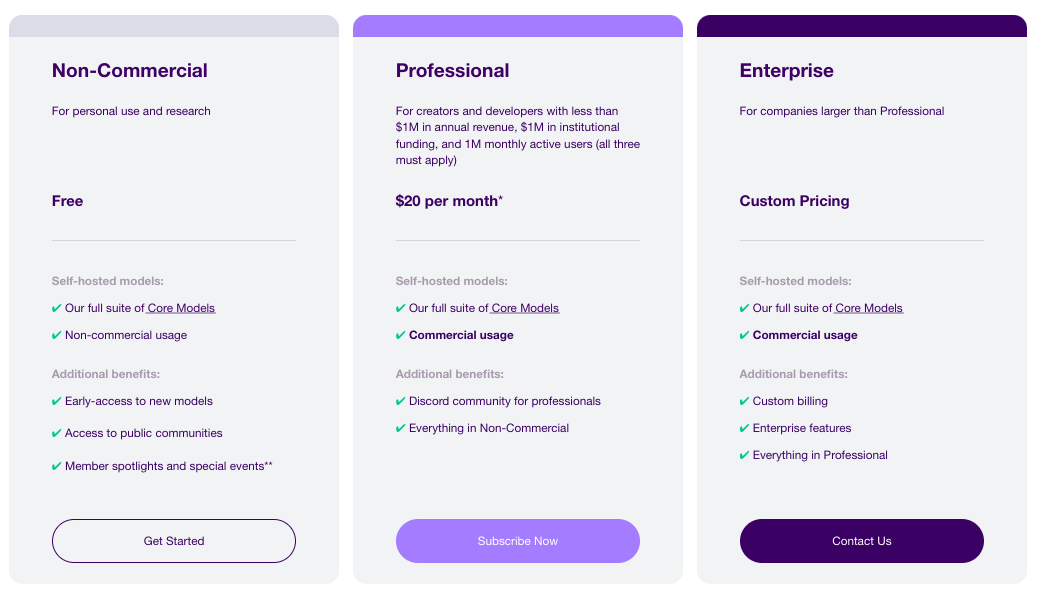

As already mentioned, Stable Code 3B is released for free for research purposes or personal use. For commercial applications, users must subscribe to one of the Stability AI Memberships.

Currently, Stability AI offers two types of memberships in addition to the non-commercial one, as illustrated in the following image:

The Professional membership costs $20 a month and provides users with access to and the right to commercially use the Stability AI Core Models, which include models for image, video, 3D, text, and code generation. The Enterprise membership is designed for large companies and comes with custom pricing and a set of enterprise features.

Learn More

We’re living in exciting times to be data professionals. The industry is on the brink of disruption following the massive adoption of generative AI tools like Stable Code 3B.

The best way to navigate the ongoing AI revolution is to stay informed, and DataCamp has you covered. Check out our dedicated articles covering other powerful generative AI tools:

I am a freelance data analyst, collaborating with companies and organisations worldwide on data science projects. I am also a data science instructor with 2+ years of experience. I regularly write data-science-related articles in English and Spanish, some of which have been published on established websites such as DataCamp, Towards Data Science, and Analytics Vidhya. As a data scientist with a background in political science and law, my goal is to work at the interplay of public policy, law, and technology, leveraging the power of ideas to advance innovative solutions and narratives that can help us address urgent challenges, namely the climate crisis. I consider myself self-taught, a constant learner, and a firm supporter of multidisciplinarity. It is never too late to learn new things.