Course

When working with OpenAI GPT models in Python, keeping an eye on the costs is crucial. That's where the tiktoken library comes in—it's a tool that makes it straightforward to estimate how much you'll spend on API calls by converting text to tokens, which are the basic building blocks GPT and other LLM’s use to understand and generate text.

Here, we’ll explore what tokenization is and why it's important for language models, introduce you to Byte Pair Encoding (BPE), and then dive into how the tiktoken library can help you predict GPT usage costs effectively.

What is Tokenization

Tokenization is a core process in the operation of GPT or other language models, serving as the first step in translating natural language into a format that these advanced AI systems can understand and process.

At its core, tokenization involves breaking down text into smaller, manageable pieces called tokens. These tokens can be words, parts of words, or even individual characters, depending on the tokenization method used.

Effective tokenization is crucial as it directly influences the model's ability to interpret input text, generate coherent and contextually appropriate responses, and estimate computational costs accurately.

Byte Pair Encoding (BPE)

Byte Pair Encoding (BPE) is a specific tokenization method that has gained prominence for its use in GPT models and other natural language processing (NLP) applications. BPE strikes a balance between the granularity of character-level tokenization and the broader scope of word-level tokenization.

It operates by iteratively merging the most frequently occurring pair of bytes (or characters) in the text into a single, new token. This process continues for a predefined number of iterations or until a desired vocabulary size is achieved.

The significance of BPE in LLM’s cannot be overstated. BPE allows these models to efficiently handle a vast range of vocabulary, including common words, rare words, and even neologisms, without needing an excessively large and unwieldy fixed vocabulary.

This adaptability is achieved by breaking down less common words into sub-words or characters likely to appear in other contexts, thus allowing the model to piece together the meaning of unfamiliar terms from known components.

Some of the key characteristics of BPE are:

- It's reversible and ensures no information is lost, allowing the original text to be perfectly reconstructed from tokens. For example, if the word “exciting” converts into [12 34 39 10 23], then this vector can be inverted back to the word “exciting” without any loss.

- BPE can handle any text, even if it wasn't included in the model's initial training set, making it incredibly versatile.

- This method effectively compresses text, with the tokenized version generally being shorter than the original text. On average, each token represents about four bytes of the original text.

- BPE also helps models recognize and understand common word parts. For example, "ing" is frequently seen in English words, so BPE will divide "exciting" into "excit" and "ing." This recurring exposure to common subwords like "ing" across various contexts enhances the model's ability to grasp grammar and generalize language patterns.

tiktoken

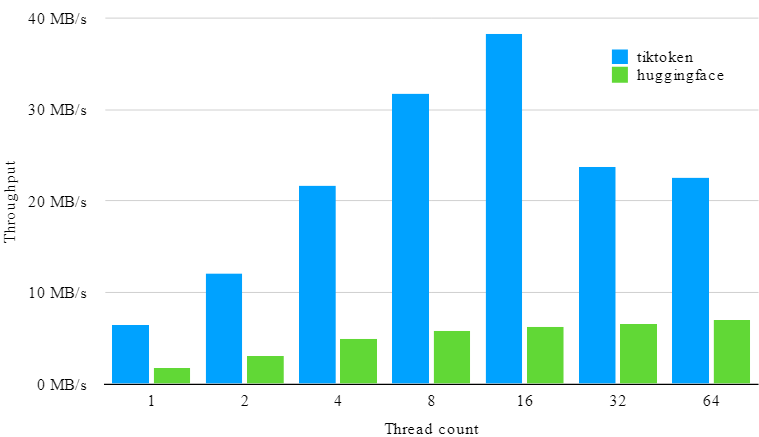

tiktoken is the fast BPE algorithm developed by OpenAI. The open-source version of the algorithm is available in many libraries, including Python. As per their GitHub, tiktoken is 3-6x faster than a comparable open-source tokenizer.

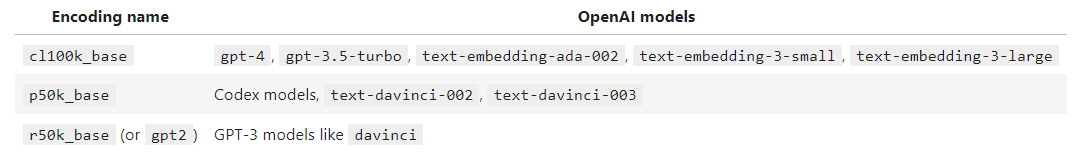

The tiktoken library supports three different encoding methods. Encodings determine the method by which text is transformed into tokens. Different models use different encodings.

Estimating The Cost of GPT Using the tiktoken Library in Python

tiktoken library can encode text strings into tokens, and since we know the encoding name for the model we are using, we can use this library to estimate the cost of API calls before making the call.

Step 1. Install tiktoken

!pip install openai tiktokenStep 2. Load an encoding

tiktoken.get_encoding method returns the relevant encoding

encoding = tiktoken.get_encoding("cl100k_base")

encoding

Output:

>>> <Encoding 'cl100k_base'>

Alternatively, you can also load it from the model name by using the tiktoken.encoding_for_model method.

encoding = tiktoken.encoding_for_model("gpt-4")Step 3. Turn text into tokens

All you need to do now is just pass the string into the encode method.

encoding.encode("This blog shows how to use tiktoken for estimating cost of GPT models")Output:

>>> [2028, 5117, 5039, 1268, 311, 1005, 73842, 5963, 369, 77472, 2853, 315, 480, 2898, 4211]

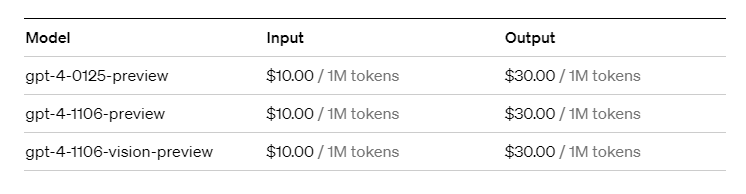

You can simply call the length method to find out how many tokens are in this string. Pricing for this model can be found on OpenAI website.

In this example, we have 15 input tokens. The price of GPT-4 at $10/1M input tokens means our cost for this query will be $0.00015 ($10 / 1,000,000 * 15).

Since the BPE algorithm is fully reversible, you can use the decode method to go back from token to text string.

encoding.decode([2028, 5117, 5039, 1268, 311, 1005, 73842, 5963, 369, 77472, 2853, 315, 480, 2898, 4211])Output:

>>> ‘This blog shows how to use tiktoken for estimating cost of GPT models’

Conclusion

Navigating the cost of using GPT models in Python doesn't have to be a guessing game, thanks to the tiktoken library. By understanding tokenization, particularly the Byte Pair Encoding (BPE) method, and leveraging tiktoken, you can accurately estimate the costs associated with your GPT API calls. This guide has walked you through the essentials of tokenization, the efficiency of BPE, and the straightforward process of using tiktoken to turn text into tokens—and back again—while keeping a keen eye on your budget.

Whether you're optimizing for cost, testing out new ideas, or scaling your applications, having this knowledge and tool at your disposal ensures that you're always informed and in control of your GPT usage costs. Remember, managing computational expenses is as crucial as the innovative work you're doing with GPT models. With tiktoken, you're well-equipped to make every token—and dollar—count.

If you are interested in doing a deep dive into embeddings and unlocking more advanced AI applications like semantic search and recommending engines, check out DataCamp’s Introduction to Embeddings with the OpenAI API.

If you are a beginner and want to get a hands-on, step-by-step guide to the OpenAI API, check out our Working With The OpenAI API course and our A Beginner's Guide to The OpenAI API: Hands-On Tutorial and Best Practices on Datacamp.