
I was introduced to video generation models when OpenAI launched the first iteration of Sora. Since then, I have come across many top-of-the-line video generation models, which inspired me to compile a list of the best ones available. The rankings are based on my personal experience, the Artificial Analysis video generation leaderboard, and overall user feedback.

Video generation is similar to image generation, but with an additional constraint: time. To maintain temporal coherence, each frame must stay consistent with the frames before it, preserving character identity, lighting, camera motion, and scene layout.

Modern systems go beyond text-to-video: you can animate a single image in your own style, stitch shots into longer sequences, and even generate synchronized audio (music, SFX, dialogue) from prompts, producing end-to-end cinematic clips.

In this blog, we will explore some of the top-rated video generation models that are transforming the worlds of marketing, cinema, advertising, and content creation, opening new creative possibilities across industries.

Image by Author | Canva + Google Nano Banana

What are Video Generation Models?

Video generation models are AI systems that create moving images from inputs such as text, images, or existing videos. They build upon text-to-image methods by incorporating the element of time. In addition to ensuring realism and adherence to prompts, these models must also maintain smooth motion, continuity of subjects, and coherence from frame to frame.

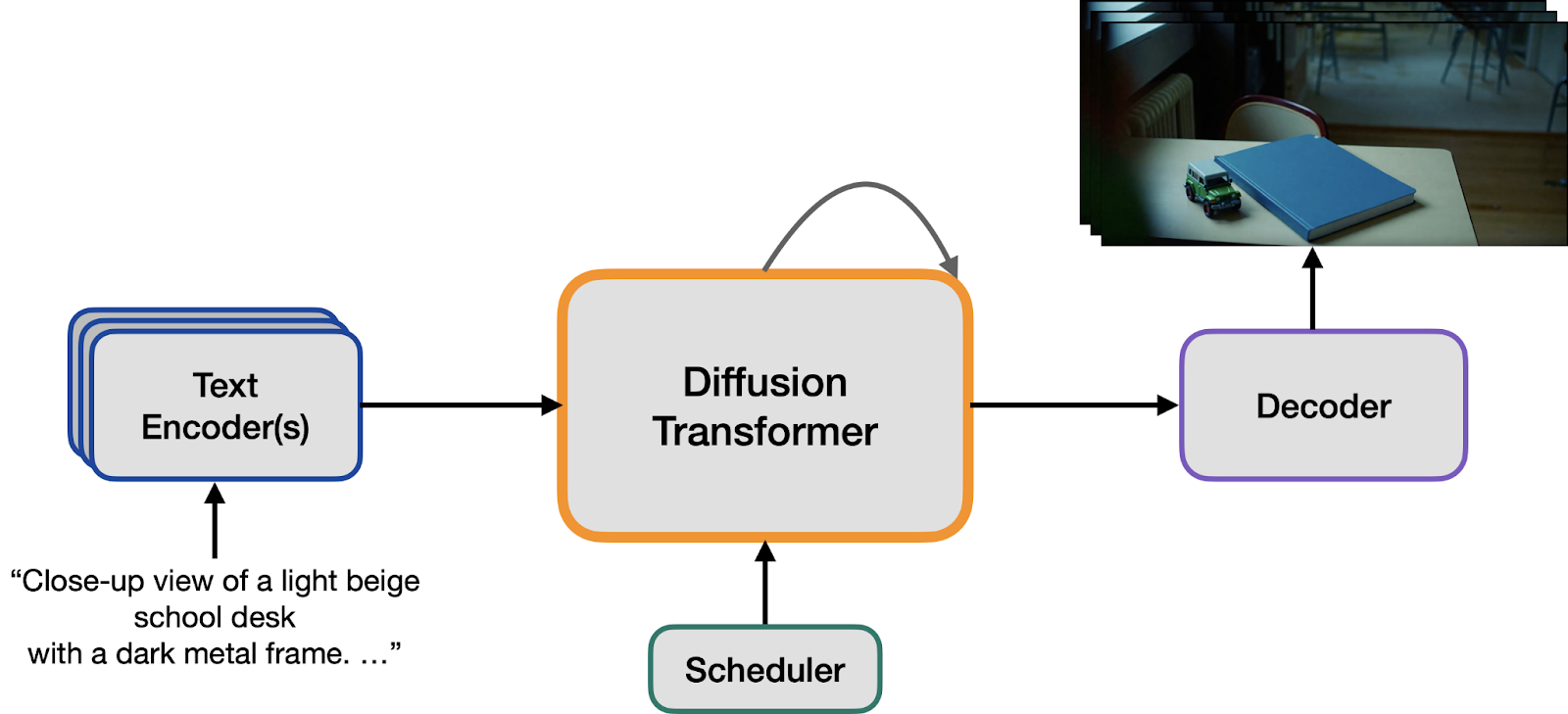

Image from State of open video generation models in Diffusers

A video generation model works by turning a text prompt into a structured representation using a text encoder, then starting from random noise and refining it step by step with a denoising network. A scheduler controls how much noise is removed at each step, while an encoder and decoder (typically a VAE) move between pixel space and a compressed latent space for efficiency. Unlike image models, video models operate on 3D (spatiotemporal) tokens that capture both spatial detail and motion over time. Because decoding a whole video at once requires a lot of memory, many pipelines decode frame by frame to reduce peak memory usage.
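To make the loop described above concrete, here is a minimal, pure-Python sketch of its structure. It is a toy under clearly stated assumptions: `encode_prompt`, `toy_denoiser`, and `decode_frames` are hypothetical stand-ins (no real model is involved), each frame's latent is flattened to a short vector instead of a full spatiotemporal grid, and the scheduler is a flow-matching Euler scheduler, which is one common choice rather than what any particular model above necessarily uses. The "denoiser" cheats by treating the prompt embedding as the clean latent, so the sampler is guaranteed to land on it.

```python
import random


def encode_prompt(prompt: str, dim: int) -> list[float]:
    """Hypothetical text encoder: deterministically maps a prompt to a vector in [-1, 1]."""
    rng = random.Random(prompt)  # seeding with the prompt makes the embedding repeatable
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]


def toy_denoiser(x_t: list[list[float]], t: float, cond: list[float]) -> list[list[float]]:
    """Hypothetical 'oracle' denoiser for the path x_t = (1 - t) * x0 + t * noise.
    It treats the conditioning vector as the clean latent x0 and returns the exact
    velocity dx_t/dt = noise - x0 = (x_t - x0) / t. A real network learns this."""
    return [[(x - c) / t for x, c in zip(frame, cond)] for frame in x_t]


def generate(prompt: str, num_frames: int = 4, latent_dim: int = 8,
             steps: int = 10, seed: int = 0) -> list[list[float]]:
    cond = encode_prompt(prompt, latent_dim)  # 1. text prompt -> embedding
    rng = random.Random(seed)
    # 2. Start from Gaussian noise: one latent vector per (compressed) frame.
    x = [[rng.gauss(0.0, 1.0) for _ in range(latent_dim)] for _ in range(num_frames)]
    # 3. Scheduler: evenly spaced timesteps from t=1 (pure noise) to t=0 (clean).
    timesteps = [1.0 - i / steps for i in range(steps + 1)]
    for t, t_next in zip(timesteps[:-1], timesteps[1:]):
        v = toy_denoiser(x, t, cond)  # 4. predict the velocity at this step
        # Euler step along the flow: x_{t_next} = x_t + (t_next - t) * v
        x = [[xi + (t_next - t) * vi for xi, vi in zip(frame, vel)]
             for frame, vel in zip(x, v)]
    return x


def decode_frames(latents: list[list[float]]):
    """Hypothetical decoder that yields one frame at a time, so only a single decoded
    frame is held in memory at once (mirrors frame-by-frame decoding)."""
    for frame in latents:
        yield [max(0, min(255, round((v + 1.0) * 127.5))) for v in frame]


for pixels in decode_frames(generate("a red fox running through snow")):
    print(pixels)
```

Swapping `toy_denoiser` for a trained network and the flat vectors for 3D latent tensors is what separates this sketch from a real pipeline; the outer loop structure stays the same.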

1. Veo 3

Veo 3 is Google’s state-of-the-art video generation model, producing high-fidelity 8-second clips at 720p or 1080p (16:9) and 24fps, with native, always-on audio. Available via the Gemini API, it excels at dialogue-driven scenes, cinematic realism, and creative animation, generating quoted dialogue, explicit sound effects, and ambient soundscapes directly from your prompt.

Veo 3 translates text prompts into cinematic shots with coherent lighting, depth-of-field, and filmic color, while maintaining temporal consistency frame to frame. It natively renders synchronized dialogue, SFX, and ambience, enabling lip-aware speech, scene-matched acoustics, and timing-accurate cues that elevate realism.

2. Sora 2

Sora 2 is a versatile text-to-video system that now generates synchronized audio alongside visuals, including dialogue, ambient sounds, and sound effects, all in one pass. This advancement bridges a significant gap in previous workflows and allows for more cohesive storytelling across multiple shots.

In addition to audio, the model focuses on creating more realistic scenes, improving physical plausibility (such as weight, balance, object permanence, and cause-and-effect relationships), and enhancing continuity across multiple shots (ensuring consistent characters, lighting, and overall world state). It also provides flexible style options, accommodating photorealistic, cinematic, and animated aesthetics.

Furthermore, Sora 2 can simulate failures, such as missed jumps or slips, rather than forcing every action to succeed, which is valuable for previsualization and safety-related concepts.

3. PixVerse V5

PixVerse V5 is a major upgrade over V4.5, pairing faster text-to-video and image-to-video generation with sharper, more cinematic visuals. It delivers smooth, expressive motion, stable style and color, and strong prompt adherence so your direction translates cleanly on screen.

PixVerse V5 focuses on realism through three pillars: motion, consistency, and detail. Its smoother camera moves and natural, weighty animations reduce the stiffness seen in earlier versions, while temporal consistency keeps style, color, and subjects coherent across frames for a cohesive, film-like flow. The result is crisp, cinematic imagery that many creators describe as “film‑worthy,” with reliable prompt following for style, tone, and subject.

4. Kling 2.5 Turbo

Kling 2.5 Turbo is the newest upgrade in Kling’s AI video generation suite, built for next‑level speed and creative freedom. It advances both text‑to‑video and image‑to‑video with stronger prompt adherence, advanced camera control, and physics‑aware realism, so your direction translates into cinematic results with less iteration and lag.

Kling 2.5 Turbo focuses on film-grade aesthetics, featuring sharper frames, balanced lighting, and rich color depth, which gives scenes an intentional and cinematic quality right from the start. Its improved prompt adherence and camera control accurately translate detailed scripts into precise visuals, smoothly executing pans, zooms, and transitions in a way that feels professionally crafted.

Realism is enhanced by physics-aware motion, incorporating elements like gravity and impacts alongside fluid-like movements. This is further complemented by more lifelike character expressions, making the action and performances appear credible on screen.

5. Hailuo 02

MiniMax’s Hailuo 02 is a next-generation video generation model designed for native 1080p output. It features state-of-the-art instruction following and exceptional handling of physics. It is powered by a new architecture called Noise-Aware Compute Redistribution (NCR), which achieves approximately 2.5 times the efficiency at similar parameter scales.

This efficiency gain enabled a model that is three times larger and trained on four times more data than its predecessor, while keeping creator costs unchanged. The result is a faster, more capable system that accurately interprets complex prompts and generates high-fidelity motion.

Hailuo 02 excels at creating scenes that require realistic physics and precise control. For instance, it can handle gymnastics-level choreography where body movements, weight distribution, and timing must all feel authentic. Its larger training scale and NCR efficiency result in clearer frames, stable temporal consistency, and high accuracy in following prompts, ensuring that intricate instructions are performed on screen with minimal drift.

6. Seedance 1.0

Seedance 1.0 is ByteDance’s latest high-quality video generation model, designed to create smooth and stable motion while incorporating native multi-shot storytelling. It effectively handles both text-to-video (T2V) and image-to-video (I2V) workflows.

This model features a wide dynamic range, allowing for fluid, large-scale movements while maintaining stability and physical realism. It can capture everything from subtle expressions to highly active scenes.

In addition to its motion capabilities, Seedance 1.0 supports the creation of multi-shot narrative videos, ensuring consistency in the main subject, style, and overall atmosphere during shot transitions and changes in space and time. This consistency is essential for cohesive storytelling and efficient production workflows.

Seedance 1.0 also offers diverse stylistic expression and precise control. It can manage multi-agent interactions, complex action sequences, and dynamic camera movements while accurately following detailed prompts. This enables a faithful translation of text into cinematic video. Furthermore, it supports high-definition outputs, including 1080p, ensuring smooth motion and strong visual detail that contribute to a polished, film-like appearance in both T2V and I2V tasks.

7. Wan2.2

Wan-AI/Wan2.2 is an advanced, open-source, large-scale video generation model. Building on Wan 2.1, it uses a Mixture-of-Experts (MoE) diffusion architecture that assigns specialized experts to different ranges of denoising timesteps, expanding model capacity without increasing per-step compute.

The best part is that it is fully open: the Wan-AI team has released code and weights for practical use, including a 5B hybrid TI2V (text-image-to-video) model with a high-compression VAE (16×16 spatial and 4× temporal compression) that supports 720p at 24fps for both text-to-video and image-to-video tasks on consumer GPUs such as the NVIDIA RTX 4090. There are also A14B T2V and I2V models for 480p and 720p output.
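To see what 16×16×4 compression means in practice, the sketch below computes the latent grid a video VAE with those strides would produce. The 1280×704, 121-frame example (about 5 seconds at 24fps) and the convention that the first frame gets its own latent, which causal video VAEs commonly use, are assumptions for illustration rather than figures taken from the Wan2.2 release.

```python
def latent_grid(frames: int, height: int, width: int,
                t_stride: int = 4, s_stride: int = 16,
                causal_first_frame: bool = True) -> tuple[int, int, int]:
    """Return (latent_frames, latent_height, latent_width) for a video VAE that
    downsamples t_stride x in time and s_stride x in each spatial dimension."""
    if height % s_stride or width % s_stride:
        raise ValueError("height and width must be multiples of the spatial stride")
    if causal_first_frame:
        # Assumption: frame 0 is encoded alone, then every t_stride frames share one latent.
        if (frames - 1) % t_stride:
            raise ValueError("frames must equal 1 + a multiple of the temporal stride")
        latent_frames = 1 + (frames - 1) // t_stride
    else:
        if frames % t_stride:
            raise ValueError("frames must be a multiple of the temporal stride")
        latent_frames = frames // t_stride
    return latent_frames, height // s_stride, width // s_stride


t, h, w = latent_grid(frames=121, height=704, width=1280)  # assumed example clip
pixel_positions = 121 * 704 * 1280
latent_positions = t * h * w
print((t, h, w))                                  # (31, 44, 80)
print(round(pixel_positions / latent_positions))  # 999
```

The ratio lands just under 16 × 16 × 4 = 1024 because the separately encoded first frame costs one extra latent frame.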

Wan2.2 creates videos with cinematic control and complex, believable motion. Its MoE design assigns a high-noise expert to the early denoising steps, which establish the global layout, and a low-noise expert to the later steps, which refine details. This combination yields clean compositions, crisp textures, and stable temporal coherence that look realistic on screen. Curated aesthetic labels in its training data enable precise, controllable visual styles covering lighting, color grading, and framing.
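The expert hand-off can be sketched as a simple router over the denoising schedule. This is a minimal illustration under stated assumptions: `route_expert` and `run_schedule` are hypothetical names, the timestep convention (t = 1.0 is pure noise, t = 0.0 is clean) and the 0.5 boundary are placeholders, and Wan2.2's actual switching rule is not reproduced here.

```python
from collections import Counter


def route_expert(t: float, boundary: float) -> str:
    """Choose the expert for timestep t (t=1.0 is pure noise, t=0.0 is clean).
    `boundary` is a hypothetical switch point, not Wan2.2's actual value."""
    return "high_noise" if t >= boundary else "low_noise"


def run_schedule(steps: int, boundary: float = 0.5) -> Counter:
    """Walk a uniform schedule from t=1.0 toward 0 and count which expert runs."""
    calls = Counter()
    for i in range(steps):
        t = 1.0 - i / steps
        calls[route_expert(t, boundary)] += 1  # exactly one expert runs per step
    return calls


calls = run_schedule(steps=40)
print(calls["high_noise"], calls["low_noise"])  # 21 19
```

Because only one expert is evaluated per step, per-step compute matches a single expert, while the total parameter count is the sum of both; that is the sense in which an MoE of this kind adds capacity without adding per-step cost.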

8. Mochi 1

genmo/mochi-1 is an advanced, open-source video generation model that showcases high-fidelity motion and strong adherence to prompts in initial evaluations, significantly reducing the gap between closed systems and open ones.

Mochi 1 excels at converting natural language prompts into coherent, cinematic motion, effectively capturing the user’s intent. The resulting videos are polished, deliberate, and true to the descriptions provided. By bridging the quality gap with leading closed models while remaining fully open and Apache-licensed, Mochi 1 enables researchers, creators, and developers to experiment, fine-tune, and integrate cutting-edge text-to-video technology into real projects without heavy constraints or cost barriers.

9. LTX-Video

LTX-Video is Lightricks' video generation system built on a Diffusion Transformer (DiT) architecture, known for delivering high-quality video in real time. It can generate 30 frames per second (FPS) video at a resolution of 1216×704 faster than the clip takes to play back. Trained on a large and diverse video dataset, LTX‑Video focuses on converting images into videos, with optional conditioning on additional images and short clips.

It offers a variety of models to balance quality and cost: a 13-billion-parameter model for the highest fidelity, distilled and FP8 variants for lower VRAM usage and faster processing, and a 2-billion-parameter option for lightweight deployments. The result is a practical, creator-friendly system that combines speed with realistic, high-resolution visuals.
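Why FP8 variants save VRAM comes down to simple arithmetic: weight memory is parameter count times bytes per parameter. The sketch below assumes bf16 (2 bytes) as the baseline precision and counts weights only; `weight_memory_gb` is a hypothetical helper, and real usage is higher once activations, the text encoder, the VAE, and framework overhead are included, so these are not measured figures for the released LTX-Video checkpoints.

```python
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "fp8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for model weights alone, in decimal gigabytes (1 GB = 1e9 bytes).
    Ignores activations, the text encoder, the VAE, and framework overhead."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params, dtype in [("13B", 13e9, "bf16"), ("13B", 13e9, "fp8"), ("2B", 2e9, "bf16")]:
    print(name, dtype, weight_memory_gb(params, dtype), "GB")
# 13B bf16 26.0 GB
# 13B fp8 13.0 GB
# 2B bf16 4.0 GB
```

Halving the bytes per parameter halves the weight footprint, which is often the difference between needing a data-center GPU and fitting on a single consumer card.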

LTX‑Video transforms still images into smooth, coherent motion at 30 FPS, featuring crisp textures, stable subjects, and believable camera dynamics, making the results feel intentionally filmed rather than synthesized. Its DiT architecture and expansive training contribute to temporal consistency and varied, lifelike content, while detailed prompts in English provide you with precise stylistic control.

10. Marey

Marey is Moonvalley’s foundational AI video model, specifically designed to meet the standards of world-class cinematography. It is tailored for filmmakers who require precision in every frame, emphasizing control, consistency, and fidelity so that your creative vision is faithfully realized from concept to final cut. From look development to editing, Marey integrates seamlessly into professional workflows and delivers high-quality images that hold up under close examination.

Marey transforms detailed directions into precise, production-ready sequences, ensuring stable subjects, consistent lighting, and smooth motion for a cinematic quality. By emphasizing frame-level control and temporal consistency, it helps maintain the tone, style, and pacing throughout different shots. This enables creators to confidently produce trailers, scenes, and campaigns.

Conclusion

Video generation models are advancing rapidly, and creators in advertising, e-commerce, marketing, cinema, YouTube, and short-form storytelling are already putting them to use. The benefits are substantial: faster iteration, cinematic quality, and new creative possibilities that were once out of reach. However, the realism that these systems achieve also presents real risks, such as misleading advertisements, product scams, and hyper-convincing deepfakes of public figures.

As we embrace these tools, it is essential to pair innovation with responsibility. This means being transparent about the use of AI, verifying sources, and adhering to clear ethical and legal guidelines.

In this blog, we have explored ten leading video models that convert text and images into highly realistic footage, accompanied by YouTube demos to showcase each model’s strengths. I have also highlighted open-source options that you can run locally, which are excellent for learning, experimentation, and fine-tuning results to suit your brand or story.

As a certified data scientist, I am passionate about leveraging cutting-edge technology to create innovative machine learning applications. With a strong background in speech recognition, data analysis and reporting, MLOps, conversational AI, and NLP, I have honed my skills in developing intelligent systems that can make a real impact. In addition to my technical expertise, I am also a skilled communicator with a talent for distilling complex concepts into clear and concise language. As a result, I have become a sought-after blogger on data science, sharing my insights and experiences with a growing community of fellow data professionals. Currently, I am focusing on content creation and editing, working with large language models to develop powerful and engaging content that can help businesses and individuals alike make the most of their data.