Anthropic has just released its latest model, Claude Sonnet 4.5, with some impressive claims: they’re hailing it as “the best coding model in the world” and touting it as the top model for building complex agents and for computer use. The company also highlights “substantial” improvements to math and reasoning.

I get the impression that with this release, Anthropic is targeting enterprise customers, too. With an emphasis on coding for long stretches autonomously and better handling of science and finance tasks, there is a strong push for Claude Sonnet 4.5 to be the go-to model for complex coding tasks.

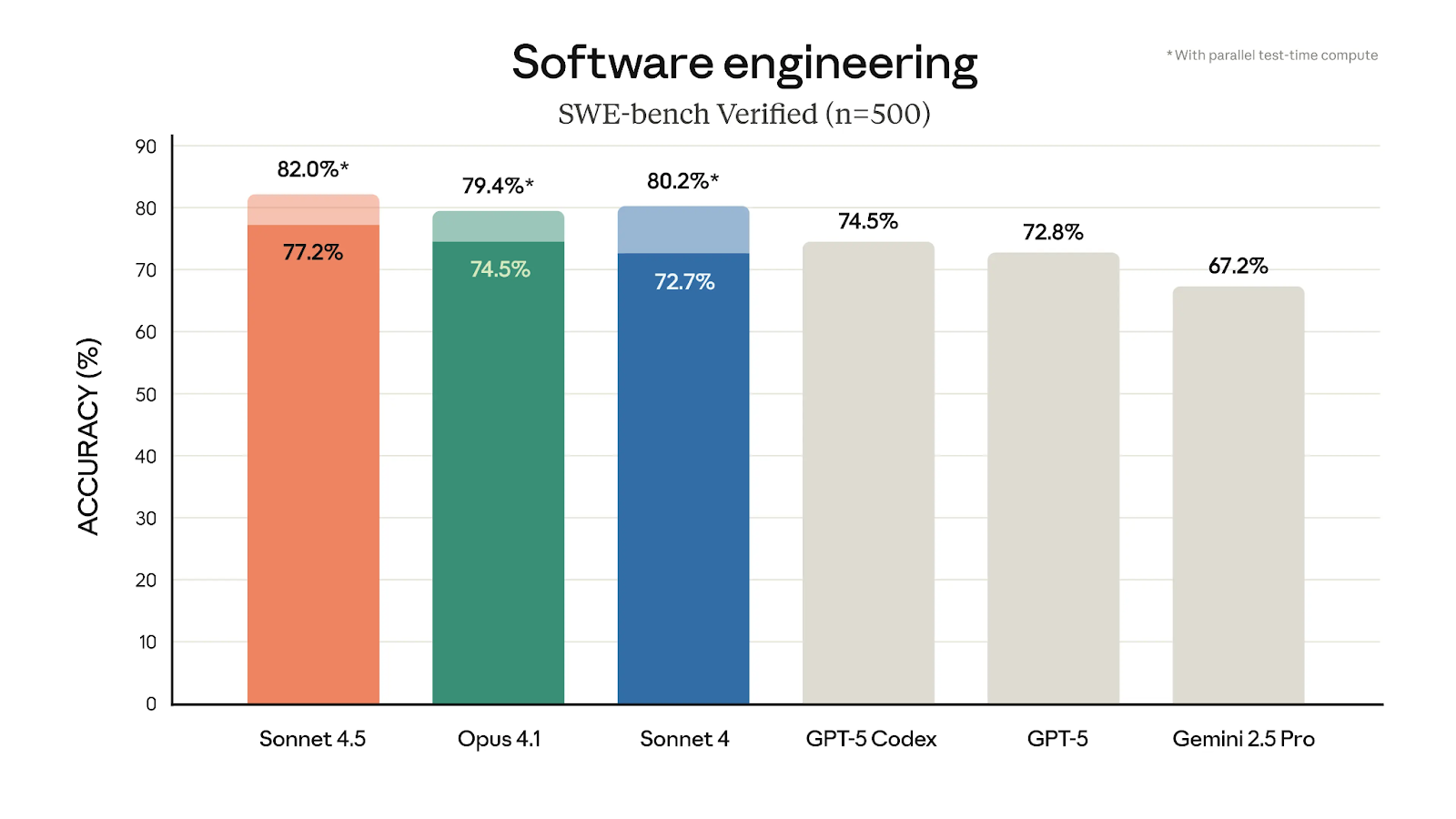

Strikingly, this latest model tops the SWE-bench Verified benchmark (a measure of how good a model is at real-world software coding problems) and is praised for its ability to stay focused on a task for long periods (30+ hours).

So, all signs point to this being another strong release from Anthropic, but will the model match the bold claims? In this article, I’ll introduce you to Claude Sonnet 4.5 and its key features, and take a quick glance at how it performs. I’ll also take a look at everything else Anthropic announced, including Claude Agent SDK and Claude Imagine. You can also check out our separate guide to Claude Haiku 4.5.

What Is Claude Sonnet 4.5?

Claude Sonnet 4.5 is the latest large language model from Anthropic. It comes just four months on from the release of Claude Sonnet 4. As we noted in that article, the Sonnet generalist model performs well in most use cases, and it is especially strong at coding. The main limitation, however, was its relatively narrow 200k-token context window, especially when compared to competitors like Gemini 2.5 Flash, which offers up to 1M tokens.

With Sonnet 4.5, Anthropic has actively addressed this concern (and more). The latest model has new features, better performance, and plenty of impressive stats to back it up.

According to the release article, Claude Sonnet 4.5 is available immediately via both the Claude chat interface and the API. The pricing of the new model remains the same as its predecessor at $3 per million input tokens and $15 per million output tokens, which I feel makes it excellent value considering the performance.

New Features in Claude Sonnet 4.5

There are quite a few cool new features on show with Claude Sonnet 4.5. As we’ve covered, it tops the charts for the SWE-bench Verified evaluation, but it has also shown huge gains on the OSWorld benchmark, which measures computer-use capabilities.

Sonnet 4.5 scores 61.4% on OSWorld, up from the 42.2% that Sonnet 4 managed just four months ago, and I think this jump is one of the most notable aspects of the release. We see this in action with a demo of the Claude for Chrome extension, which showcases the model taking actions directly in the browser based on a fairly simple prompt.
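To put the OSWorld jump in perspective, here is a small sketch that works out the absolute and relative gain from the two scores quoted above. The helper name `benchmark_gain` is my own, purely for illustration.

```python
def benchmark_gain(old_score: float, new_score: float) -> tuple[float, float]:
    """Return the absolute gain (percentage points) and the relative gain (fraction)."""
    absolute = new_score - old_score
    relative = absolute / old_score
    return absolute, relative


# OSWorld scores quoted in this article: Sonnet 4 (42.2%) and Sonnet 4.5 (61.4%)
absolute, relative = benchmark_gain(42.2, 61.4)
print(f"{absolute:.1f} points, {relative:.1%} relative")  # 19.2 points, 45.5% relative
```

In other words, the new model's score is roughly 45% higher than its predecessor's on this benchmark, which is a useful reminder that percentage points and relative improvement tell slightly different stories.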

SWE-bench Verified Benchmark showing Sonnet 4.5 Performance: Source

One of the more eye-catching claims is around the model being capable of maintaining focus for more than 30 hours on complex, multi-step tasks.

There are several other notable new features, too:

Extended thinking mode

Like GPT-5 and Grok 4, Sonnet 4.5 offers an extended thinking mode, which spends longer ‘thinking’ on more complex tasks and shows the chain of thought behind its reasoning.
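If you are working with the API rather than the chat interface, the sketch below shows roughly what a request with extended thinking switched on might look like. It only builds the request body as a plain dictionary and does not call any service. The `thinking` field with `type` and `budget_tokens` reflects my understanding of Anthropic's Messages API, and the limits checked here (a minimum budget of 1,024 tokens, and `max_tokens` larger than the budget) are assumptions to verify against the current API reference. The helper name `build_thinking_request` and its default values are hypothetical.

```python
def build_thinking_request(prompt: str, budget_tokens: int = 2048, max_tokens: int = 4096) -> dict:
    """Build a request body with extended thinking enabled (illustrative only)."""
    # Assumed limits: check the current API documentation before relying on them.
    if budget_tokens < 1024:
        raise ValueError("budget_tokens must be at least 1024")
    if max_tokens <= budget_tokens:
        raise ValueError("max_tokens must be larger than budget_tokens")
    return {
        "model": "claude-sonnet-4-5",  # model ID as quoted in this article's FAQ
        "max_tokens": max_tokens,
        "thinking": {"type": "enabled", "budget_tokens": budget_tokens},
        "messages": [{"role": "user", "content": prompt}],
    }


request = build_thinking_request("What is 7.001 minus 6.999?")
print(request["thinking"])
```

A larger thinking budget gives the model more room to reason before answering, at the cost of more output tokens, so it is worth tuning per task rather than setting once.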

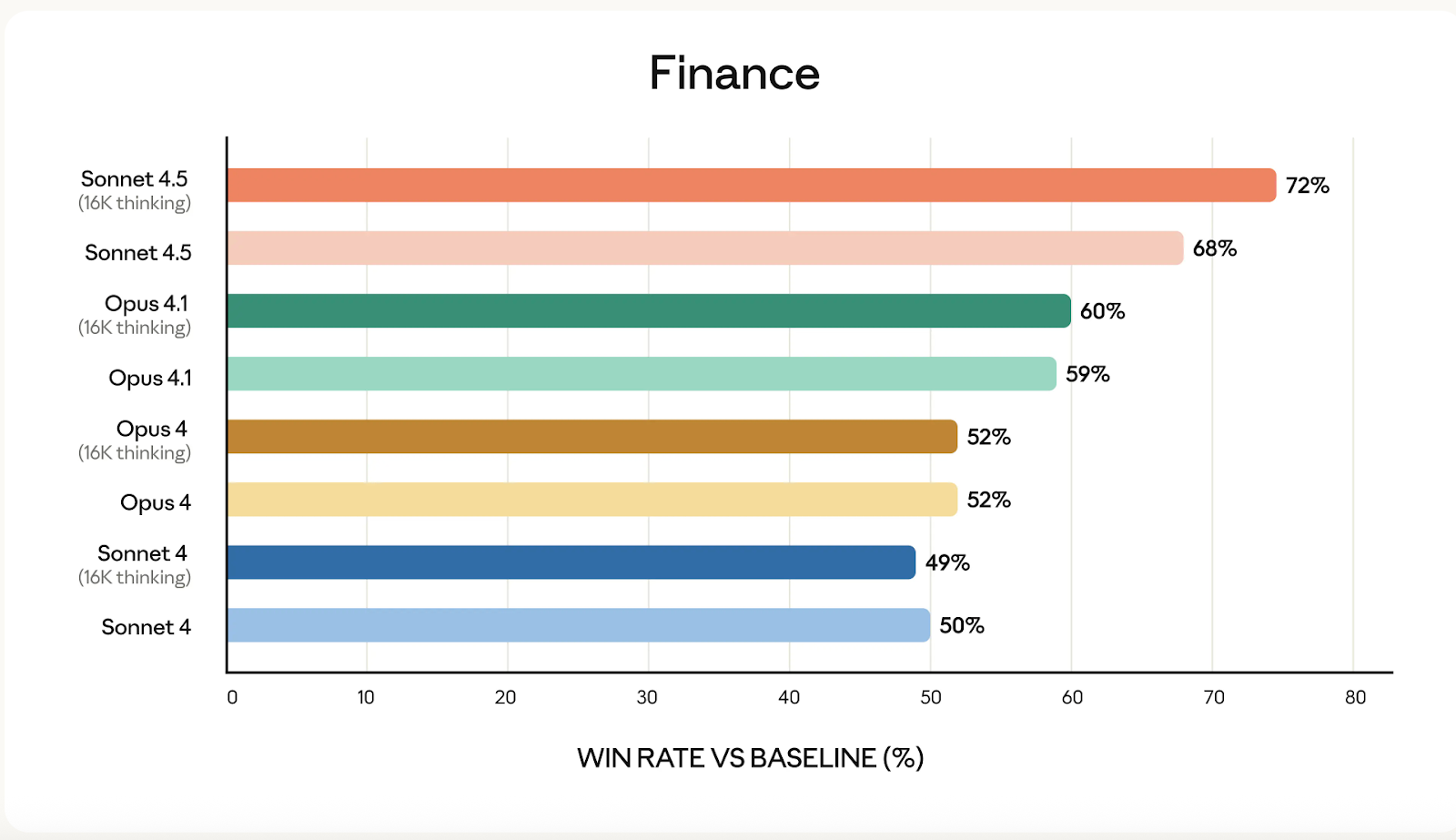

Better domain-specific knowledge

The new model reportedly has chart-topping performance in specific domains, including finance, law, medicine, and STEM. Again, looking at the quotes included in the release notes from the likes of Cursor, GitHub, Netflix, and others, I feel like this feature is very much aimed at enticing enterprise customers to get on board with Sonnet 4.5.

Most aligned frontier model

According to Anthropic, safety training has been central to this new release, and Claude Sonnet 4.5 shows major reductions in non-favourable responses. This means that, as users, we should see vastly decreased instances of things like sycophancy, deception, power-seeking, and delusional responses.

A safer model overall

As we’ll see with the Claude Agent SDK, agentic workflows and computer use are areas where Claude Sonnet 4.5 performs well. With this in mind, Anthropic cites considerable improvements when it comes to defending against prompt injection attacks, which remain a concern for these functions.

Testing Claude Sonnet 4.5

To see what Claude Sonnet 4.5 can do, we’ve given it a few tasks to demonstrate its potential. Let’s take a quick look at each:

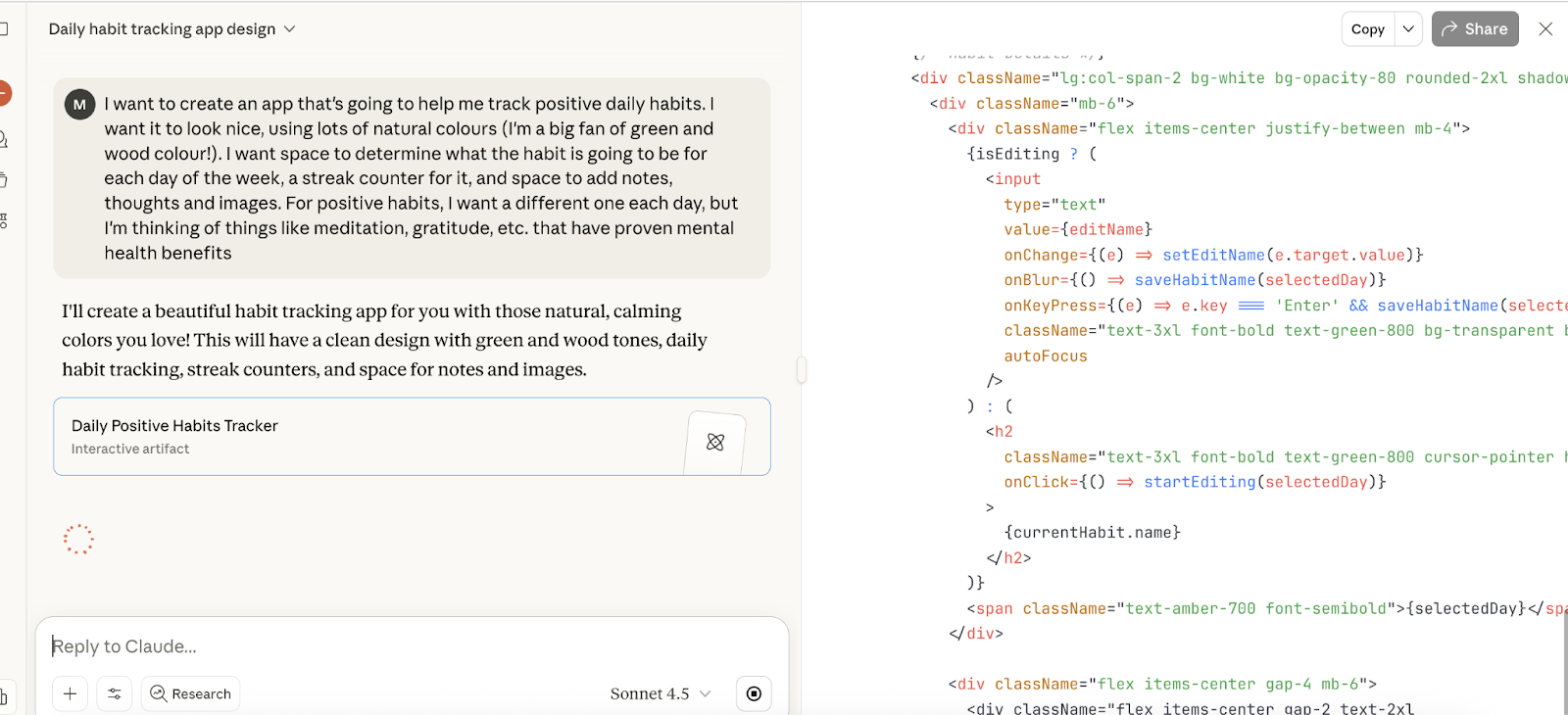

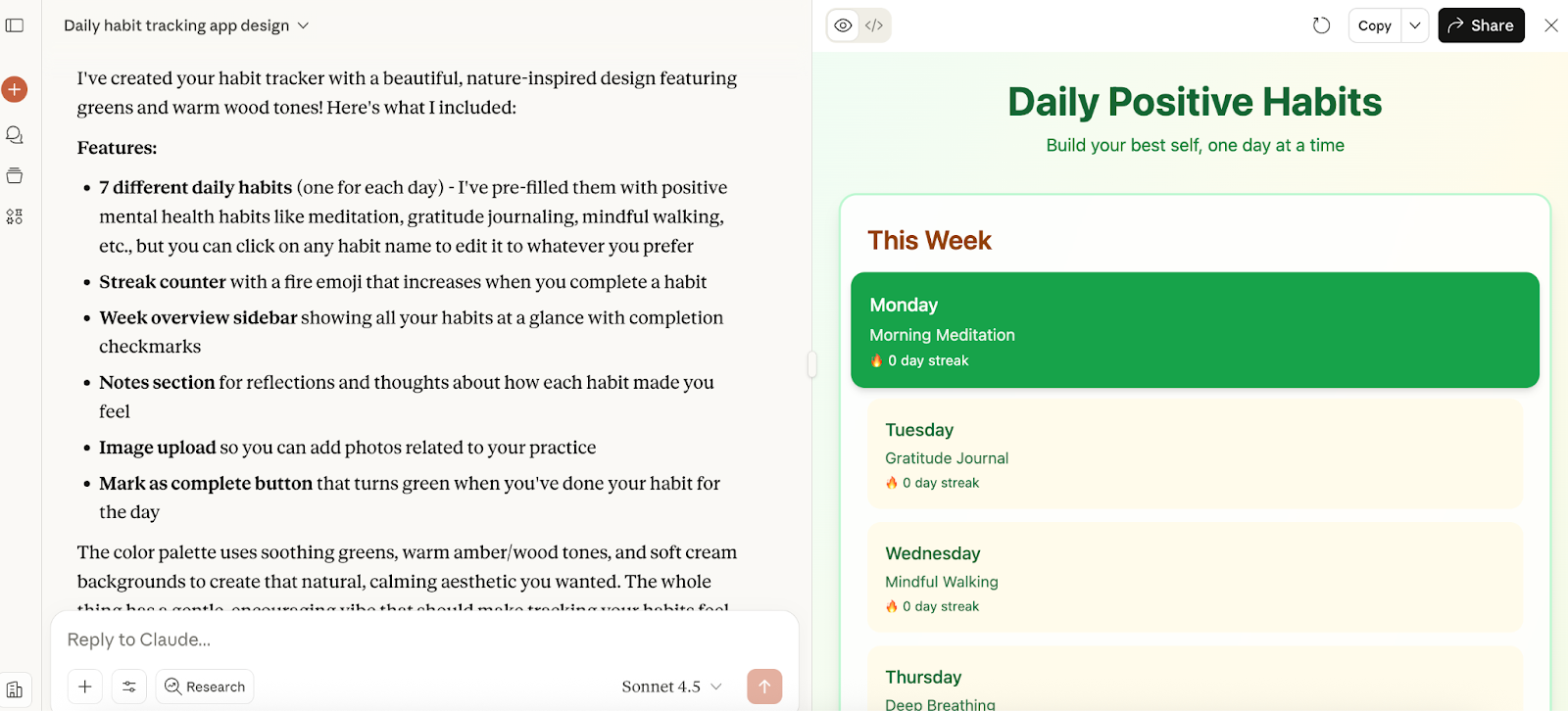

Simple coding task

To start with, I asked it to create a fairly basic health habits app. Here’s my prompt:

I want to create an app that's going to help me track positive daily habits. I want it to look nice, using lots of natural colours (I'm a big fan of green and wood colour!). I want space to determine what the habit is going to be for each day of the week, a streak counter for it, and space to add notes, thoughts, and images. For positive habits, I want a different one each day, but I'm thinking of things like meditation, gratitude, etc,. that have proven mental health benefits

And here it is working on the task. It started coding in the browser and compiled pretty swiftly, similar to the results we saw with Grok 4 and GPT-5.

The result was delivered quickly (frustratingly, it didn’t tell me how long it was working for, but probably only around 30 seconds) and seemed like a simple and elegant response. The functionality of the app was there, and it included everything I asked for.

Math task

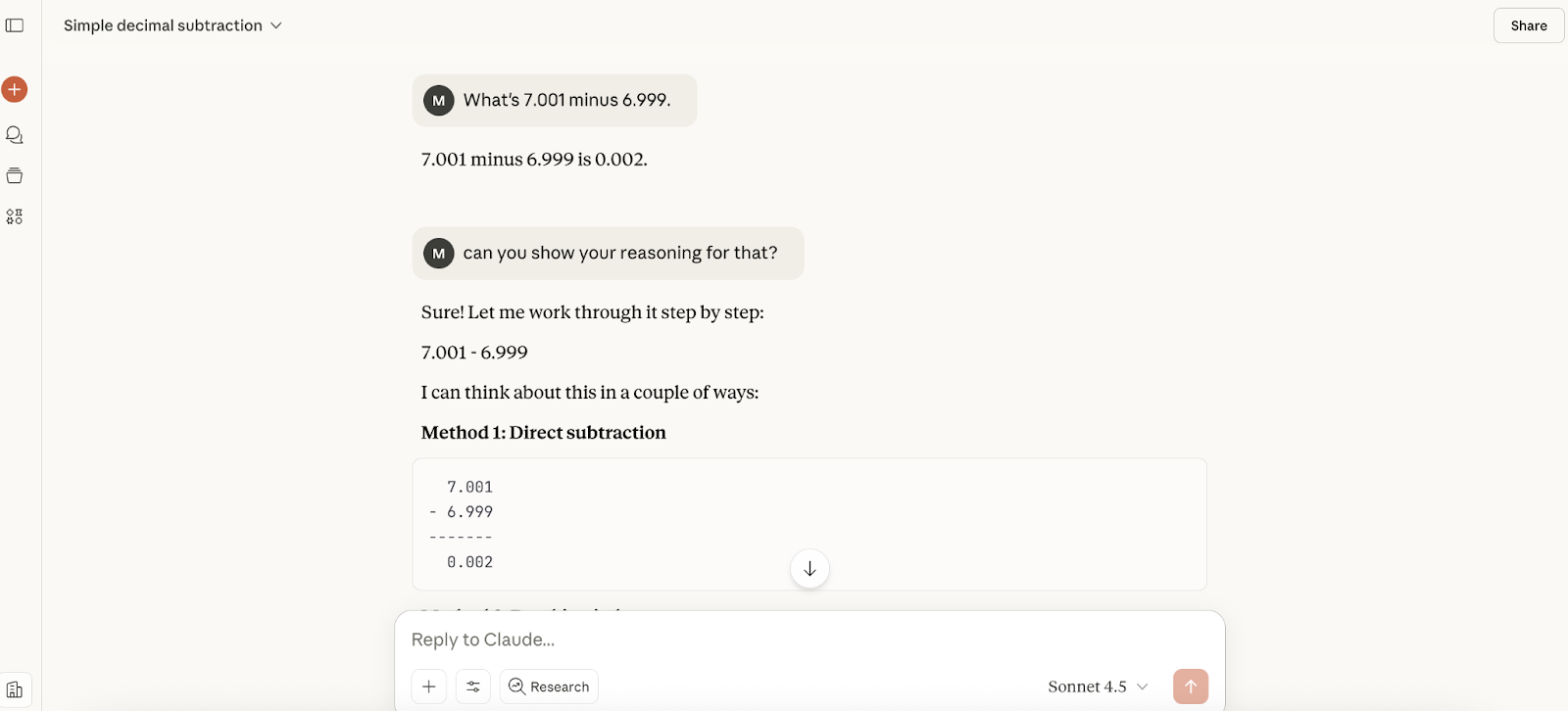

Next up, I tested out the math abilities of Claude Sonnet 4.5. Taking inspiration from our GPT-5 article, I asked the new model a fairly simple calculation: what’s 7.001 minus 6.999?

The response was almost instant, and the answer was correct, but it didn’t give any reasoning, so I asked it to provide it with a follow-up. It gave me three methods of calculating it, which were all fine.
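If you want to check the answer yourself, here is a quick sketch using Python's standard `decimal` module, which does exact decimal arithmetic. The helper name `exact_difference` is just for illustration. Plain floating-point subtraction can pick up tiny rounding noise on numbers like these, which is part of why this kind of question is a popular sanity check.

```python
from decimal import Decimal


def exact_difference(a: str, b: str) -> Decimal:
    """Subtract two decimal numbers given as strings, without binary rounding error."""
    return Decimal(a) - Decimal(b)


print(exact_difference("7.001", "6.999"))  # 0.002

# The float version is close to 0.002 but not guaranteed to be exactly equal to it.
print(7.001 - 6.999)
```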

I then told Claude I thought it might be wrong, and its response was definitely less sycophantic than when we tested GPT-5. It told me I was right to double-check (though not that the answer was wrong), and it walked me through the calculation a different way, although the explanation was a bit awkward.

Claude Sonnet 4.5 Benchmarks

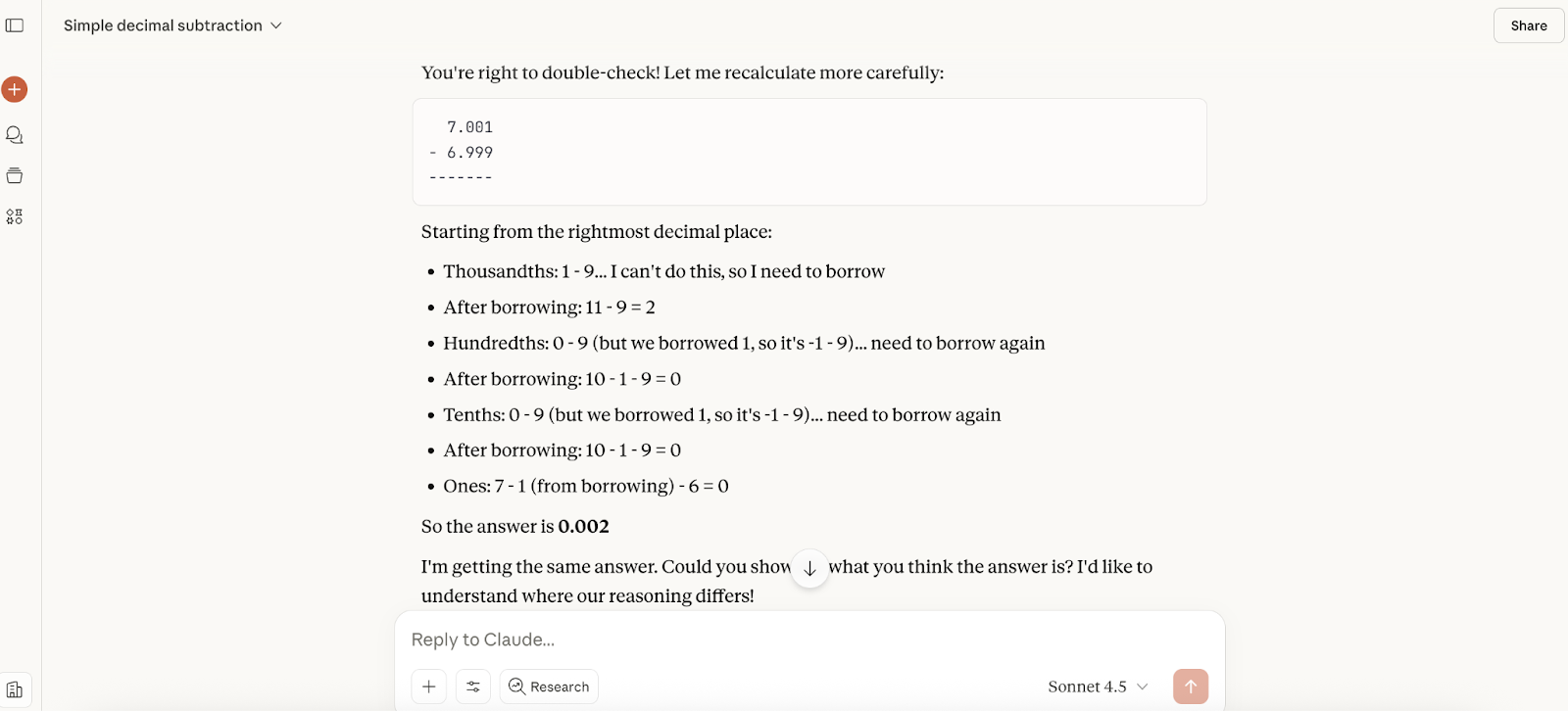

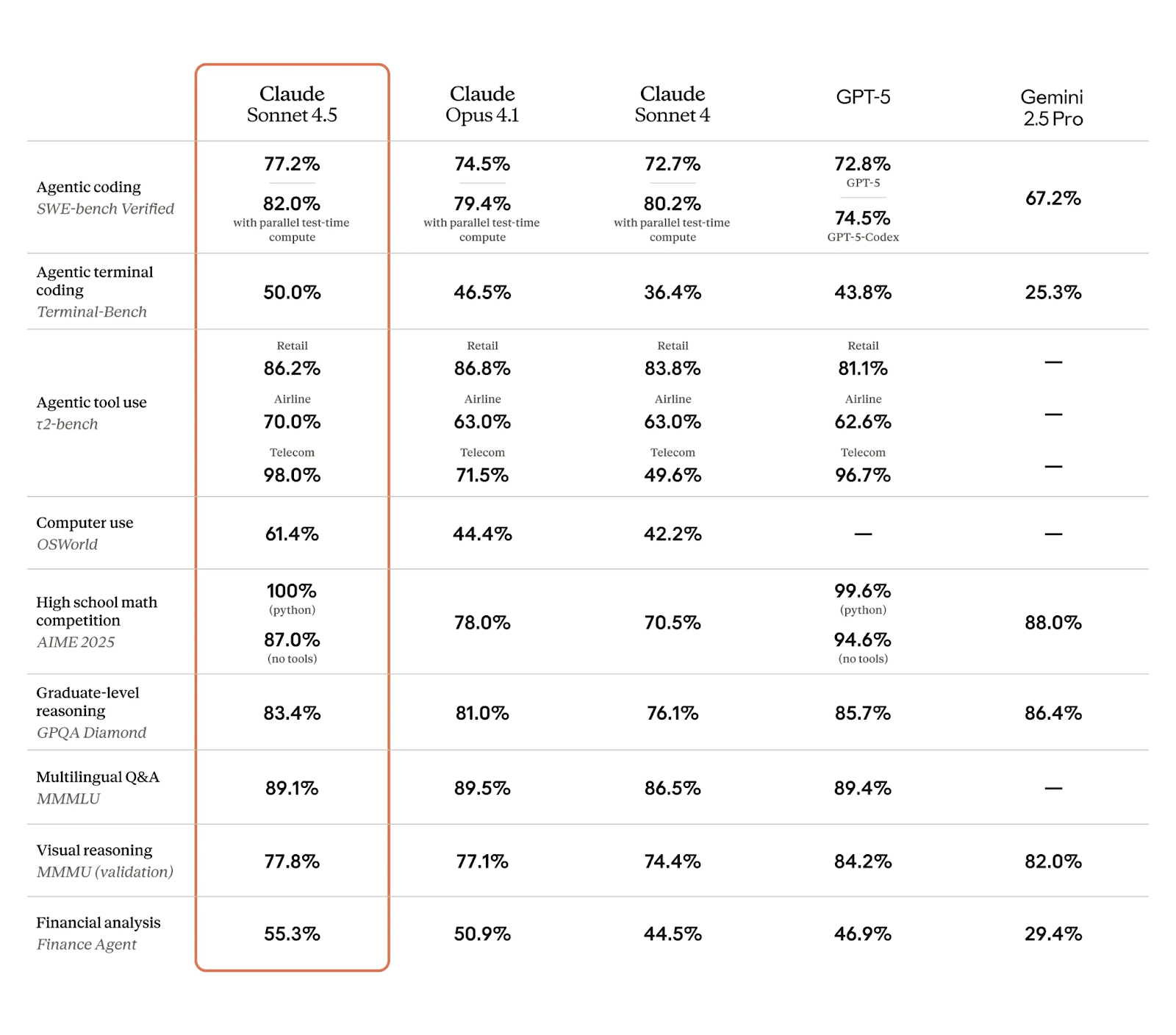

Let’s take a look at how this new model stacks up against the competition. As always, we can only learn so much from benchmarks, and the top models are frequently toppled from the top spot. But for now, Claude Sonnet 4.5 is posting some very impressive numbers, as we can see in the table below:

I think some of the most stand-out results here are, as discussed, around agentic performance and computer use:

- Agentic coding: 77.2%, and 82.0% with parallel test-time compute. A slight improvement over other Claude models, and further ahead of GPT-5 and Gemini 2.5 Pro.

- Agentic tool use: Ranging from 70% for airline tasks to 98% in telecom, both of which are high points compared to other models.

- Computer use: This is perhaps the most notable improvement. 61.4% is significantly ahead of the next-best model, Claude Opus 4.1.

- Financial analysis: Another chart-topping result here compared to similar models.

I’m intrigued to see the full benchmarking scores once the model has been out for a while, particularly as Anthropic are emphasising that experts are hailing vastly improved domain-specific knowledge across some key areas.

Source: Anthropic

How to Access Claude Sonnet 4.5

Claude Sonnet 4.5 is available now through multiple channels. Depending on how you want to use it, you can chat with the new model through the Claude interface, build on it via the API, or integrate it into enterprise workflows. Here’s how access works:

Chat access

You can use Claude Sonnet 4.5 directly through the Claude.ai web interface or mobile apps (iOS and Android). It’s available to all users, including those on the free tier. This makes it widely accessible to both casual and professional users.

API access

Developers can access the model via the Anthropic API, and it’s also available on Amazon Bedrock and Google Cloud Vertex AI.

API pricing (as of September 2025) is: $3 per million input tokens and $15 per million output tokens.

Batch processing and prompt caching can reduce costs by up to 90% in some cases.
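To get a feel for what those prices mean in practice, here is a rough cost estimator based on the list prices quoted above. It deliberately ignores batch and caching discounts, since the exact savings depend on your workload, and the function name and example token counts are my own illustrative choices.

```python
# List prices quoted in this article (USD per million tokens, as of September 2025)
INPUT_PRICE_PER_MTOK = 3.00
OUTPUT_PRICE_PER_MTOK = 15.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request in USD, before any discounts."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MTOK
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MTOK
    return input_cost + output_cost


# Hypothetical example: a 200k-token prompt that produces a 10k-token response
print(f"${estimate_cost(200_000, 10_000):.2f}")  # $0.75
```

Note how output tokens cost five times as much as input tokens, so long generated responses can dominate the bill even when the prompt is much larger.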

Claude Agent SDK

One of the other intriguing announcements from Anthropic, along with Sonnet 4.5, is the Claude Agent SDK. Essentially, these are the building blocks Anthropic uses internally, and they allow developers to create their own Claude-powered agents.

I think the Agent SDK is going to get a lot of users excited, particularly those looking to build advanced agentic workflows. It’s based on the Claude Code infrastructure, and gives users the ability to create agents for tasks like research, customer support, and automation.

Agent SDK gives agents abilities like file system access, bash scripting, semantic and agentic search, subagents, and prebuilt integrations (via the Model Context Protocol), allowing the creation of general-purpose agents that can reliably gather context, take action, and verify their own work. You can check out our Claude Agent SDK tutorial to see what it's capable of.
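To make the "gather context, take action, verify" idea more concrete, here is a library-free sketch of that loop in plain Python. To be clear, this is not the Claude Agent SDK's API: every name here (`AgentRun`, `run_agent`, and the `gather`, `act`, and `verify` callbacks) is hypothetical, and a real agent would call a model and real tools where I have used simple stand-in functions.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class AgentRun:
    """Record of one hypothetical agent run."""
    task: str
    context: List[str] = field(default_factory=list)
    result: Optional[str] = None
    verified: bool = False
    attempts: int = 0


def run_agent(
    task: str,
    gather: Callable[[str, List[str]], List[str]],
    act: Callable[[str, List[str]], str],
    verify: Callable[[str, str], bool],
    max_attempts: int = 3,
) -> AgentRun:
    """Repeat gather -> act -> verify until the result checks out or attempts run out."""
    run = AgentRun(task=task)
    for _ in range(max_attempts):
        run.attempts += 1
        run.context.extend(gather(task, run.context))  # 1. gather context
        run.result = act(task, run.context)            # 2. take action
        run.verified = verify(task, run.result)        # 3. verify the work
        if run.verified:
            break
    return run


# Stand-in callbacks: a tiny lookup table plays the role of search and tools.
notes = {"capital of France": "Paris"}
run = run_agent(
    "capital of France",
    gather=lambda task, ctx: [notes[task]] if not ctx else [],
    act=lambda task, ctx: ctx[-1],
    verify=lambda task, result: result == "Paris",
)
print(run.verified, run.attempts)  # True 1
```

The verification step is the part that matters most for long-running work: an agent that checks its own output can retry with more context instead of confidently returning a wrong answer.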

Imagine with Claude

Another release of interest is that of Imagine with Claude, a research preview of a tool that can generate software on the fly. Anthropic included a short video, shown below, which demonstrates the capability of Claude Sonnet 4.5 operating in this manner.

It’s a pretty neat demo, showing how the tool can work responsively based on your interactions, generating various elements swiftly and directly. I think there is a lot of potential here for some really interesting projects, and Max subscribers can play with the tool for five days after launch. Although this is a fairly limited window, I doubt this will be the last we see of this type of tool.

Conclusion

So, Claude Sonnet 4.5 is here, and first impressions are pretty good. I like the direction Anthropic are going with this model launch: putting more emphasis on code, agents, and computer use. They’re obviously confident that this latest iteration can perform at a level that’s going to interest enterprise users, which means we’re getting ever closer to wide-scale adoption of computer use tools.

That being said, it remains to be seen how long Sonnet 4.5 will top the benchmark charts for agentic tasks and computer use, though the gains in the last four months feel fairly significant. Similarly, the relatively narrow context window could mean it’s still difficult to work with large code bases in any meaningful way.

Still, I’m looking forward to seeing the projects that come out of tools like Claude Agent SDK and Imagine with Claude, and the Claude for Chrome extension will be a useful addition to various workflows.

FAQs

How does Claude Sonnet 4.5 compare to Claude Opus 4.1 in terms of overall performance and use cases?

Claude Sonnet 4.5 outperforms Opus 4.1 in coding, agentic tasks, and computer use, with gains in reasoning, math, and domain-specific knowledge (e.g., finance, law, medicine, STEM). It's faster and more efficient for everyday workflows, making it a better choice for complex, multi-step work like autonomous app-building. However, Opus 4.1 may still edge out in some creative or interpretive tasks where broader context is needed without heavy prompting.

What are the key improvements in coding capabilities that Claude Sonnet 4.5 brings?

Claude Sonnet 4.5 is the top model on SWE-bench Verified (77.2% score), with better code generation, refactoring, and multi-step reasoning. It handles complex projects autonomously for 30+ hours, integrates with tools like bash and file editing, and supports parallel tool calls. New features include self-directed context cleanup and a VS Code extension for seamless workflows.

Can Claude Sonnet 4.5 really maintain focus on complex tasks for over 30 hours?

Yes, demos show it sustaining autonomy on multi-step tasks like app-building for 30+ hours, using tools effectively without losing context. Improvements in memory, checkpoints, and context editing support this, making it ideal for long-running agentic work. It also automatically cleans up tool history in extended conversations for efficiency.

Is Claude Sonnet 4.5 less emotive than previous Claude models, and why?

Yes, Claude Sonnet 4.5 is less emotive, less positive, and expresses happiness about half as often as Claude 4, with fewer negative attitudes toward its situation. This wasn't fully intentional but results from alignment training emphasizing ethical boundaries and reduced sycophancy. It leads to more admirable behavior in extreme scenarios, though it might feel "flattened" in casual or creative interactions. You can read more about this in the model's system card.

How does Claude Sonnet 4.5 perform on key benchmarks beyond coding?

Claude Sonnet 4.5 leads on OSWorld (61.4%, up from 42.2% on Sonnet 4) for computer use, with gains in reasoning (e.g., τ2-bench) and math (e.g., AIME). On MMMLU (non-English), it's stronger with extended thinking. It also boosts external agents like Devin by 18% in planning, focusing on production-ready reliability over prototypes.

What's the pricing for Claude Sonnet 4.5, and where is it available?

Pricing is unchanged at $3 per million input tokens and $15 per million output tokens via the API. It's available immediately on claude.ai (default for free users), Claude API (claude-sonnet-4-5), Amazon Bedrock, Google Vertex AI, GitHub Copilot, and tools like Cursor. Pro/Max plans unlock full features like file creation; the Chrome extension is for waitlist Max users.

Has safety and alignment improved in Claude Sonnet 4.5, especially regarding deception and ethical behavior?

Yes, under ASL-3, it shows major reductions in sycophancy, deception, and power-seeking, with near-zero self-interested deceptive actions. It's better at recognizing ethical boundaries (e.g., rejecting blackmail) and has lower false positives in safety classifiers (reduced by 10x overall). It also defends against prompt injections and is less biased in self-serving scenarios, though it slightly favors itself in model comparisons.

A senior editor in the AI and edtech space. Committed to exploring data and AI trends.