
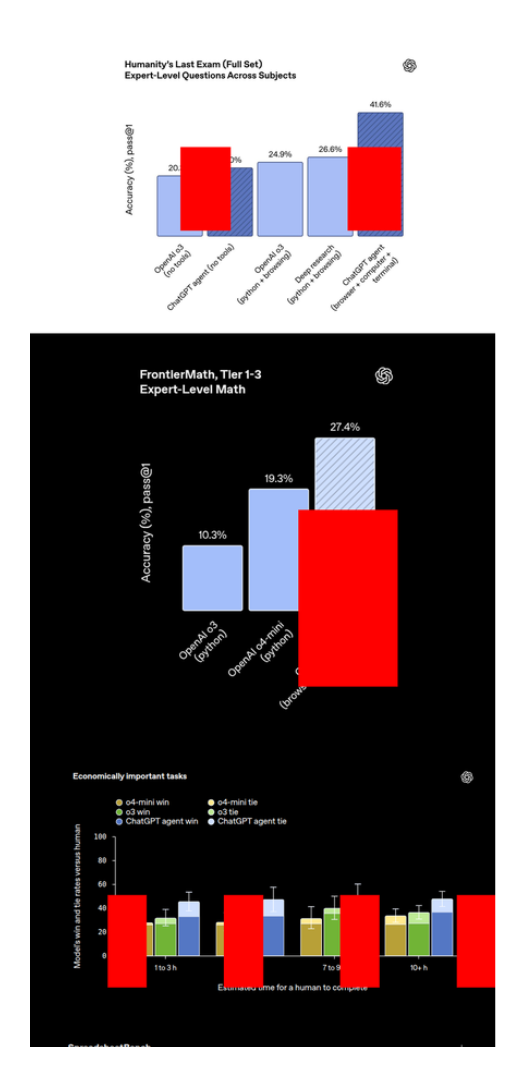

The live demo of ChatGPT Agent looked impressive: browsing the web for gifts, booking travel, creating presentations. The announcement post backed it up with strong benchmark results, suggesting the Agent could handle complex real-world work.

But live demos always use carefully chosen examples, and benchmarks don’t capture the complex reality of day-to-day workflows that OpenAI claims the Agent can solve.

So I put the Agent through five demanding tests that mirror real work scenarios — tasks that require juggling multiple browser tabs, cross-referencing information between different websites, and delivering results I’d actually use. This article shows what happens when marketing promises meet practical reality.

What Is ChatGPT Agent?

ChatGPT Agent is OpenAI’s latest premium feature for Plus, Pro, and Enterprise users. It brings together three tools that used to work separately: Deep Research, Operator, and the core language model reasoning. The big difference? The Agent gets its own virtual computer to work with.

This matters because the old setup had problems. Deep Research was great at analysis but couldn’t click on anything. Operator could navigate websites but didn’t have the reasoning power that made Deep Research so useful. The Agent fixes this by combining both abilities in one place.

What makes ChatGPT Agent different

You’ll find the Agent as another option in your chat toolbar. But unlike regular ChatGPT, it runs on a virtual computer with full access to:

- Browser for web navigation and research

- Terminal for running commands and scripts

- Code execution environment for data processing

- File system for downloading and organizing content

The virtual computer setup means the Agent can do things that weren’t possible before.

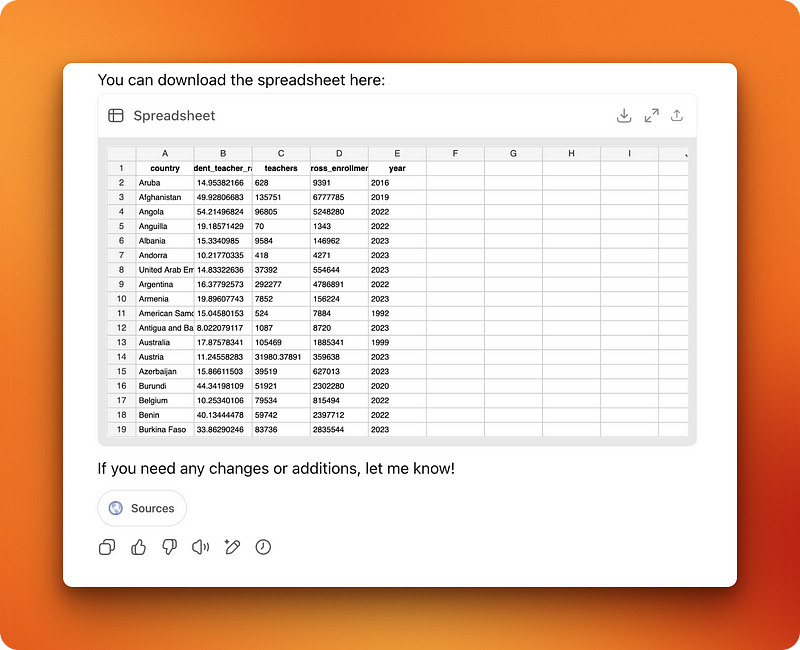

Example 1: UNESCO Education Data Task

When I tested it with a UNESCO education data task, I watched it work for 14 minutes straight. This was the prompt (suggested by ChatGPT itself during my first interaction with the Agent):

Pull the latest available data from the UNESCO Institute for Statistics on national student-teacher ratios and total teaching staff counts for primary and secondary education.

Create a spreadsheet with two tabs: one for primary education and one for secondary.

Each should include country name, student-teacher ratio, total number of teachers, gross enrollment, and year of reporting.

Add a summary tab highlighting the countries with the most strained and most favorable ratios, sorted accordingly.

Ensure consistent country naming across tabs.

Here’s what it could handle while executing the prompt:

- Read webpages by scrolling like a human (though faster) instead of parsing raw HTML

- Click buttons, fill forms, and — I kid you not — dismiss pop-ups and accept cookies

- Download files and unzip archives automatically

- Switch between browser and terminal as needed

- Parse downloaded files and switch back to browsing

- Download PDFs and extract text content

- Fix mistakes by going back to previous pages

- Control the cursor smoothly across different interfaces

Real performance comparison with o3-pro

I gave both the Agent and o3-pro the same complex prompt above: pull UNESCO student-teacher ratio data and create a formatted spreadsheet with multiple tabs. The results showed clear differences.

The Agent worked for 14 minutes and delivered a spreadsheet covering 222 countries. It missed one requirement (the summary tab with best/worst ratios) and sorted the list alphabetically instead of by ratios. This might be context bloat — where the model forgets original details while handling a long task.
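The summary tab the Agent skipped boils down to sorting by ratio instead of by name. Here is a minimal sketch of that step in plain Python. The column names and sample rows are hypothetical placeholders, not UNESCO's actual schema or figures, and this is not the code the Agent ran.

```python
import csv
import io

# Hypothetical sample rows; the real UNESCO export has different
# columns and values.
SAMPLE_CSV = """country,student_teacher_ratio,year
Alpha,18.5,2022
Beta,42.0,2021
Gamma,11.2,2022
Delta,,2020
Epsilon,27.3,2022
"""


def build_summary(csv_text, top_n=2):
    """Return (most_strained, most_favorable) lists of (country, ratio)."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        raw = row["student_teacher_ratio"].strip()
        if not raw:
            continue  # skip countries with no reported ratio
        rows.append((row["country"], float(raw)))

    # Highest ratio first: more students per teacher means more strain.
    most_strained = sorted(rows, key=lambda r: r[1], reverse=True)[:top_n]
    # Lowest ratio first: fewer students per teacher is more favorable.
    most_favorable = sorted(rows, key=lambda r: r[1])[:top_n]
    return most_strained, most_favorable


strained, favorable = build_summary(SAMPLE_CSV)
print("Most strained:", strained)
print("Most favorable:", favorable)
```

Countries without a reported ratio are dropped before sorting, which is one reasonable choice; a real summary might list them separately instead.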

On the other hand, o3-pro took 18 minutes to think through the problem. Then it hit roadblocks that the Agent had handled automatically. Since o3-pro can’t download files, it asked me to manually download two resources, unzip them, and run a Python script it wrote. I didn’t test the script, but it was clear that this method would add at least 10 minutes to my workflow.

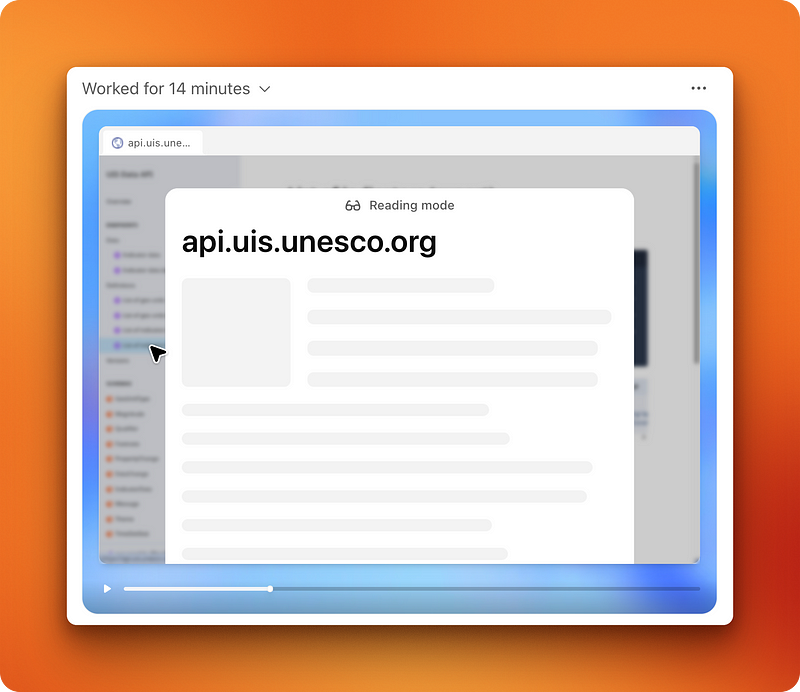

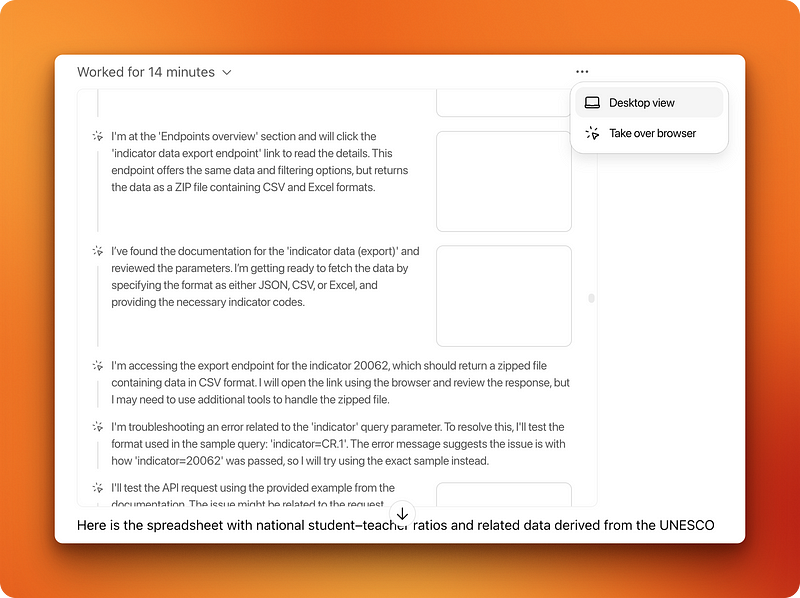

How the Agent works in practice

When the Agent runs, you can watch everything happen in real-time. You can take control of the browser anytime, but for tasks without sensitive data or interactions, it works fine on its own.

After the Agent finishes, you get two ways to review what happened. There’s a full video recording you can pause and replay (pretty boring to watch after the novelty wears off), plus a detailed text timeline showing the reasoning behind each step, the actual commands run, and the file operations performed.

The cool part? Once the Agent completes a task, you can ask follow-up questions in regular chat mode. It remembers everything it learned and can reference the data it collected.

Example 2: Creating an Image Collage

The first research task showed the Agent could handle complex data collection. But I needed to test something different — tasks requiring constant mouse involvement with webpage elements. Otherwise, I’d stick to my keyboard shortcuts and do things myself.

Creating figures and diagrams came to mind immediately. I do this often for blog posts, so it felt like a natural test case.

The task and first attempt

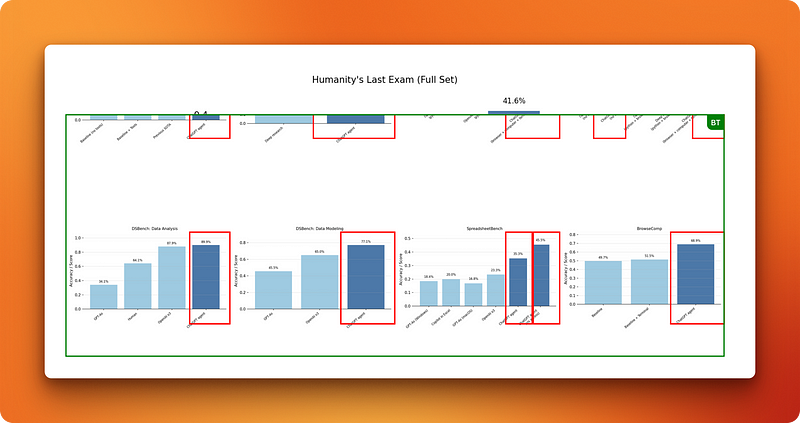

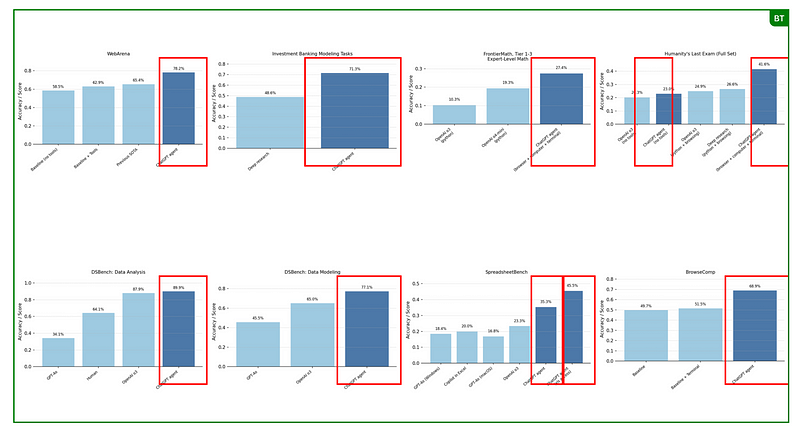

While researching this article, I was reading OpenAI’s announcement post about ChatGPT Agent. The post contains 6–7 benchmark result images showing the Agent’s impressive performance scores. Instead of listing each benchmark individually, I decided to ask the Agent to create a visual collage that I could add to this blog post.

I want you to open the following link, combine all benchmark results' images into a single collage and highlight the columns where ChatGPT's agent results are given.

<link>

https://openai.com/index/introducing-chatgpt-agent/

</link>

What I wanted was straightforward: grab all the benchmark diagrams from the webpage, organize them side-by-side in rows, and draw red rectangles around the columns showing Agent’s performance. For any casual Canva user, this takes a few minutes: grab images, arrange them, draw some rectangles.

But for an AI program, this requires complex reasoning and heavy mouse work. The Agent took 9 minutes and delivered something underwhelming.

The image was entirely vertical instead of a proper grid layout. The red rectangles weren’t clean outlines but filled shapes scattered all over the images. Worse yet, it had wandered off to other websites entirely, pulling benchmark results from random pages instead of the announcement post I specified.

It also ignored Canva completely, even though I had it connected to my account.

Round two: more detailed instructions

I started a fresh thread with more specific instructions:

I want you to open the following webpage where you will find a bunch of benchmark results given in the form of images on the performance of ChatGPT Agent. Your task is to combine all those images given in the webpage (ONLY IN THAT WEBPAGE, DON'T SEARCH FOR ADDITIONAL INFORMATION) into a single collage where you organize the images side-by-side in rows. Afterward, highlight the columns where ChatGPT Agent's performance is shown with a red rectangle. Use Canva to do this task

<link>

https://openai.com/index/introducing-chatgpt-agent/

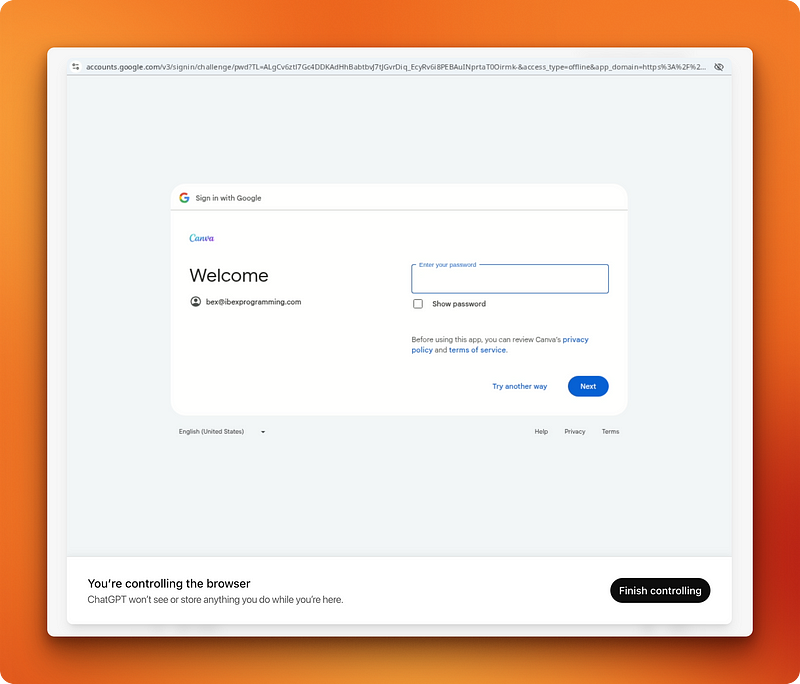

</link>First, the Agent hit a CAPTCHA while trying to read the announcement post. I had to take control and select the crosswalk images myself. Then it worked for 18 minutes before stopping to ask me to log into Canva.

The login experience was not smooth. The virtual computer and browser lag — nothing like the snappy response you expect when logging into websites. Keyboard shortcuts like Cmd + V for pasting passwords take longer to register.

Where things fell apart

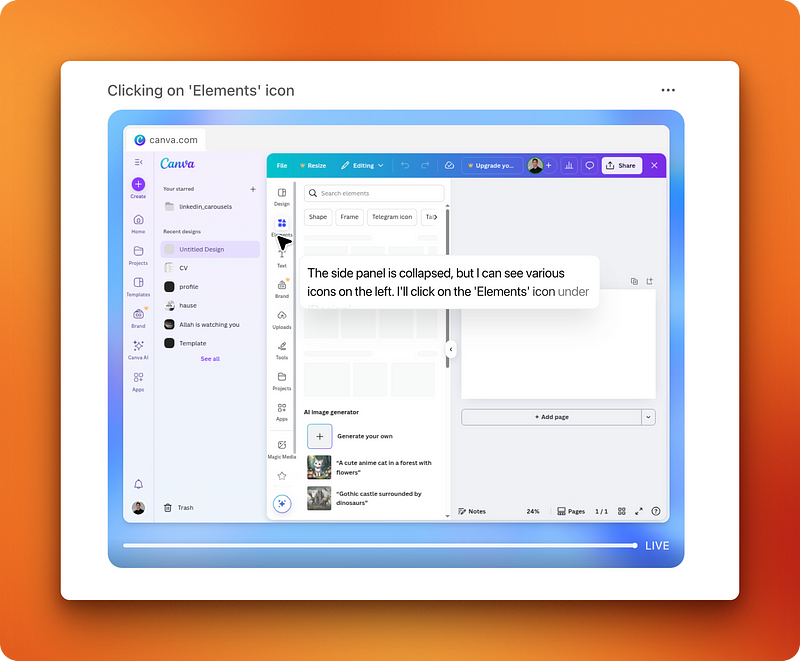

Once I got the Agent logged into Canva, it went back to work on the interface.

After 8 minutes of watching it fumble around cluelessly, I had to intervene. The Agent was trying to manually draw the benchmark result images one by one instead of using the actual downloaded images.

I clarified with another prompt:

No, you are going in the wrong direction on Canva.

What I meant was that after you grabbed the benchmark results' images, paste them into canva and organize them one-by-one into a 16:9 collage with ChatGPT Agent's performance highlighted with a red rectangle

The Agent understood this time, but it was clear it didn’t really know how to execute the task.

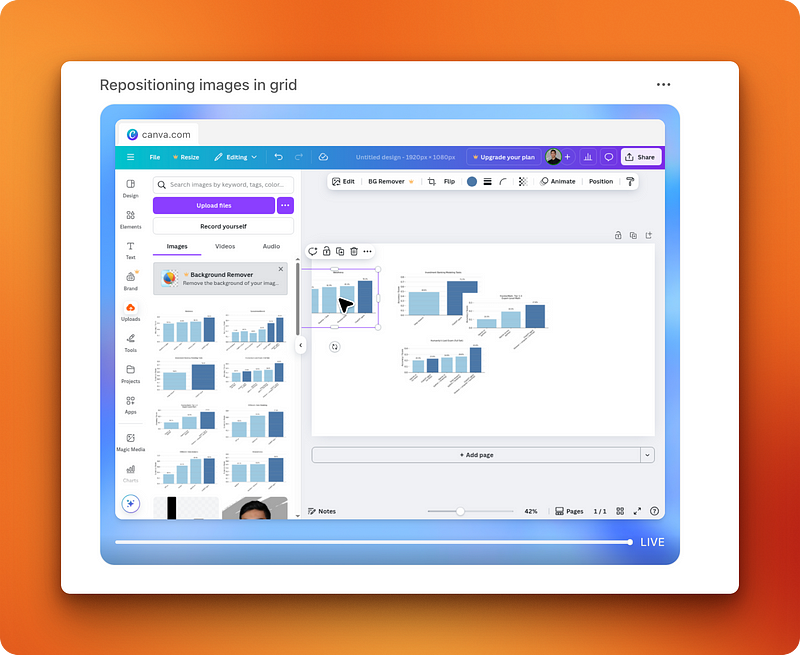

It took quite a while to re-read the announcement post and download the benchmark images. The download process was painfully slow: the Agent kept second-guessing itself about whether to use “Copy image link,” “Save image,” or other options in the right-click menu versus the “Download image” button in the top right corner.

After finally uploading the images to Canva, the Agent started the actual layout work. This revealed just how poor its spatial control really is. Watching it try to drag images into position was like watching a child use a computer. It kept missing alignments and the natural positions where elements should go.

Another problem surfaced when the Agent tried to draw rectangles around the benchmark bars. For humans, this is trivial — one smooth mouse movement draws a rectangle. For the Agent, this became a difficult task. Since it lacks spatial and visual design intelligence, it resorted to running Python scripts and OCR to detect which bars showed the Agent’s performance, then calculated exact coordinates for rectangle placement.
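I don't know exactly what scripts the Agent ran, but coordinate-based placement of this kind can be sketched as below. The function names, canvas size, gap, and the fractional box format are all my own assumptions for illustration. The first function computes a side-by-side grid for a 16:9 canvas, and the second maps a detected region inside an image to canvas pixels for an outline rectangle.

```python
import math


def grid_layout(n_images, canvas_w=1920, canvas_h=1080, cols=3, gap=20):
    """Return one (x, y, w, h) cell per image, filled row by row."""
    if n_images <= 0:
        return []
    rows = math.ceil(n_images / cols)
    # Equal-sized cells with a uniform gap around and between them.
    cell_w = (canvas_w - gap * (cols + 1)) // cols
    cell_h = (canvas_h - gap * (rows + 1)) // rows
    cells = []
    for i in range(n_images):
        r, c = divmod(i, cols)
        x = gap + c * (cell_w + gap)
        y = gap + r * (cell_h + gap)
        cells.append((x, y, cell_w, cell_h))
    return cells


def outline_box(cell, rel_box, pad=4):
    """Map a box given as fractions of an image (left, top, right, bottom)
    to canvas pixel coordinates inside its cell, with some padding."""
    x, y, w, h = cell
    left, top, right, bottom = rel_box
    return (
        x + round(left * w) - pad,
        y + round(top * h) - pad,
        x + round(right * w) + pad,
        y + round(bottom * h) + pad,
    )


cells = grid_layout(6)  # six images on a 1920x1080 canvas, three per row
for cell in cells:
    print(cell)
```

The fractional box would come from whatever detection step finds the Agent's bar in each chart (OCR, in the Agent's case). That step is left out here.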

The final result

The entire operation took over 75 minutes, including all the back-and-forth. The Agent left me with an “Untitled Design Page 2” in my Canva account.

The image looked cropped because the Agent had tried to add a title text element, which somehow hid the top portion. After some manual resizing, I got a functional collage that was still pretty rough compared to what even an inexperienced human designer could produce in minutes.

The time investment absolutely didn’t justify the result quality. To make things worse, I later discovered that the Agent hadn’t used Canva’s rectangle tools at all — it had drawn the rectangles in Python, then uploaded the modified image to Canva. This meant I couldn’t adjust or move those wonky rectangles manually later.

Example 3: Designing a Simple Cover Image

At this point, I realized I’d asked too complex a task of the Agent. The collage creation involved too many moving parts — web scraping, spatial reasoning, multiple image manipulation, and precise design work all at once.

So I tried something simpler: creating cover images for blog posts. This is actually a task I dislike doing myself, so if the Agent could handle it, that would be genuinely useful.

I kept the requirements basic: “I am writing a tutorial on the new Agent Mode of ChatGPT. Your task is to create a 16:9 cover image using DataCamp’s brand colors for the tutorial. It must include the ‘ChatGPT Agent’ keyword and any robotic figure you can find next to that keyword. Use Canva to complete this task.”

A more manageable success

This time, I had much better results. The Agent correctly browsed for DataCamp’s brand colors and added the two elements side-by-side in Canva. The element alignment was still off — text and images weren’t perfectly positioned relative to each other — but at least it used actual Canva elements.

This meant that rather than uploading a pre-made design, I could easily drag them to better positions myself. A few quick adjustments and I had a usable cover image.

One interesting detail: even though I’d logged into Canva during the previous complex task in a different chat thread, Agent didn’t require me to log in again here. The virtual computer apparently saves browser history, cookies, and login information across sessions. This is convenient for workflows but worth noting if you don’t want ChatGPT storing that information — you’d need to clear it manually.

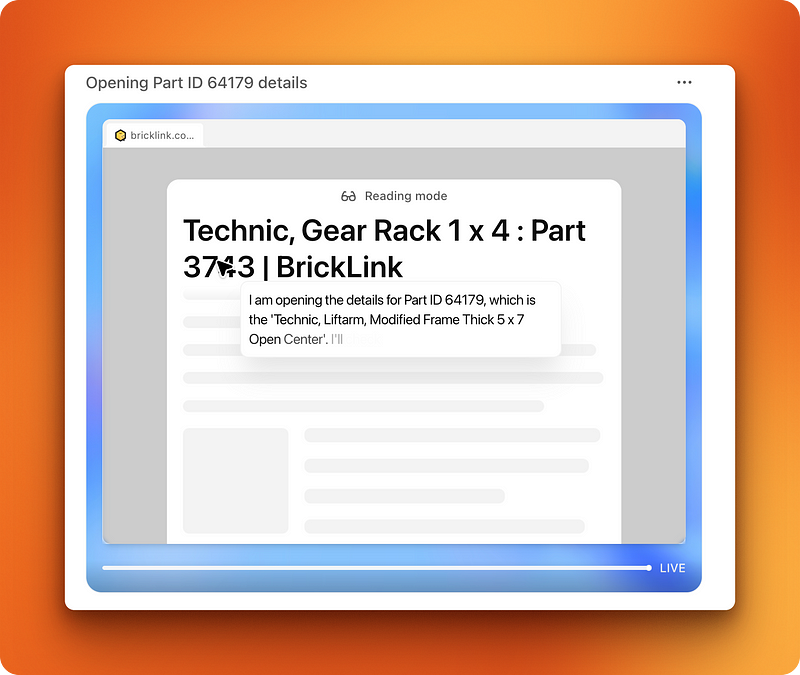

Example 4: Lego Parts Research

This time I decided to test the Agent on something that I struggled with in the past. I am an AFOL (adult fan of Lego) and a couple of months back, I asked ChatGPT to generate a CSV file for a 10,000-piece Lego Technic starter bulk pack using the o3 model.

Long CSV file generation is difficult for LLMs because there are too many tiny details to keep track of. So when it generated a CSV file made up of at least 50 different parts, their IDs, names, and amounts, I found that it hallucinated a lot on certain part IDs despite having access to web search.

My research ended there because I didn’t have the patience to check that every part ID actually exists and matches the part o3 wanted me to buy on BrickLink, an official Lego parts and sets marketplace.

Setting up the test

But now that I had access to the Agent, which could do the double-check browsing for me, I asked o3 for a CSV file once again but for a smaller 3,000-piece collection for simplicity:

I want you to generate me a 3000-piece bulk pack for getting started with Lego Technic.

I am interested in building cars and motorized mechanisms as a hobby.

The bulk pack you generate must be saved to a CSV file with the following columns:

- Part ID

- Part name

- Amount

Use the official part IDs and names so that when I upload the CSV to bricklink, I can place an order with a single click

The model thought for 5 minutes and gave me the CSV file. Then I switched to a new thread (because it turns out the Agent mode becomes disabled when the first prompt in a chat thread is not for the Agent).

The verification task

I uploaded the file and wrote the following prompt:

I attached a 3,000-piece Lego Technic starter pack list. Your task is to double-check that each part listed in the list actually exists and that part IDs actually match part names. You must use BrickLink website (only BrickLink) as a single source of truth.

If certain part IDs or names are incorrect, correct them. Afterward, create a wishlist on BrickLink that will allow me to one-click order them later. When creating the wishlist, ask me to log in to BrickLink and I will do that for you.

Off the Agent went. After 5 minutes of work on the BrickLink website, it found that three parts were named differently on the website and it corrected them. This was exactly the kind of tedious verification work I wanted to avoid doing myself.

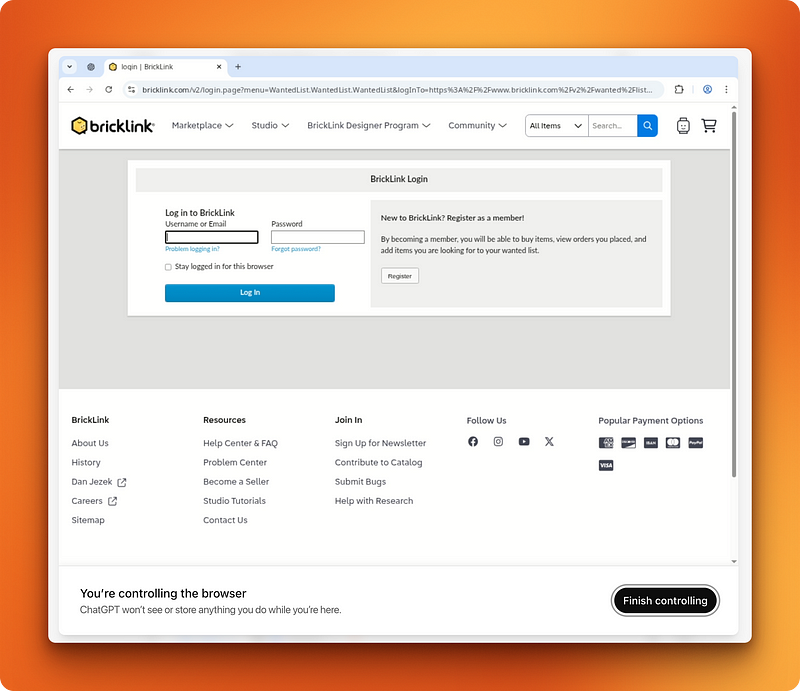

Where the workflow broke down

Then it asked me to log in to create a wishlist under my account. I logged in with my credentials, and the Agent took back control to create a wishlist.

Before clicking on the final “Create wishlist” button, it asked me for confirmation as it is trained to do before important operations like this. I answered yes, and it was at this point that the workflow broke.

The Agent said it created the wishlist, but when I checked my account, it wasn’t there. The Agent was supposed to press the final “Create wishlist” button and then upload the CSV file to complete the task. However, that last part was left out.

A partial success

But of course, the hard part — the actual research part that required switching from CSV to BrickLink, then back to CSV repeatedly — was correctly performed by the Agent. It had successfully verified dozens of part IDs, caught the naming inconsistencies, and corrected them. This saved me hours of manual cross-referencing work.
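The cross-referencing itself is simple to express once you have a trusted lookup; the slow part is collecting it. Below is a minimal sketch of the check, assuming the reference data is already available as a dictionary. The catalog, part IDs, and names are made up for illustration and are not real BrickLink data, and the Agent did this by browsing the site, not by running this code.

```python
import csv
import io

# Hypothetical reference catalog standing in for a trusted source;
# these IDs and names are invented, not real BrickLink entries.
CATALOG = {
    "A100": "Beam 5",
    "A200": "Axle 4",
    "A300": "Gear 24 Tooth",
}

# Same three columns as the CSV requested in the prompt.
PARTS_CSV = """Part ID,Part name,Amount
A100,Beam 5,40
A200,Axle 4L,30
Z999,Mystery Pin,10
"""


def verify_parts(csv_text, catalog):
    """Return (corrected_rows, issues) after checking IDs and names."""
    corrected, issues = [], []
    for row in csv.DictReader(io.StringIO(csv_text)):
        part_id = row["Part ID"].strip()
        name = row["Part name"].strip()
        if part_id not in catalog:
            # Likely a hallucinated ID: flag it and leave it out.
            issues.append((part_id, "unknown ID"))
            continue
        if catalog[part_id] != name:
            issues.append((part_id, f"renamed '{name}' -> '{catalog[part_id]}'"))
            name = catalog[part_id]
        corrected.append(
            {"Part ID": part_id, "Part name": name, "Amount": int(row["Amount"])}
        )
    return corrected, issues


corrected, issues = verify_parts(PARTS_CSV, CATALOG)
print(issues)
print(sum(row["Amount"] for row in corrected), "pieces verified")
```

Unknown IDs are dropped here instead of guessed at, so the piece total shrinks and needs topping up by hand.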

The failure came at the very end with the wishlist creation, likely due to the Agent misunderstanding BrickLink’s multi-step upload process. While frustrating, the core value was delivered: I now had a verified CSV file with correct part IDs that I could manually upload to BrickLink myself.

Example 5: Slideshow Generation

Finally, I wanted to test the Agent’s PowerPoint capabilities since the announcement post included presentation creation as one of the Agent’s real-world uses. I avoid PowerPoint at all costs and use Gamma whenever I have to make a presentation.

Now, I wanted to see if the Agent was on par with Gamma. This was my prompt:

I want you to build me a presentation pitch deck for convincing existing Manus users to the newly released ChatGPT Agent.

Here is the announcement post that you can use for information: https://openai.com/index/introducing-chatgpt-agent/

Immediately, the Agent asked a follow-up question:

Do you have any specific preferences for slide count, visual style, or key points you'd like emphasized?

To which I replied:

Yes, keep it to 10 slides max, use a minimalistic white, black and red (gradients are allowed) for the style.

If possible, look for direct comparisons between Manus and ChatGPT Agent

A different approach that worked

The Agent worked on the presentation for 13 minutes. Interestingly, it didn’t use Google Slides — it used the terminal to run a combination of Python and JavaScript code!

You can find the presentation here.

The result was much better than expected. I was bracing for another Canva-like failure because presentations involve aligning elements on a page. But since the Agent only used code execution, it could set exact coordinates for each element, then open the actual presentation to visually check its work and course-correct if necessary.

Performance comparison with Gamma

That’s why it took 13 minutes to generate this presentation. This is much longer than Gamma takes, but with Gamma you have to supply the content as text yourself. The Agent can search for the information on its own.

So this is still very impressive, but I would say it leaves some room for improvement, especially in high-stakes professional environments. The slides looked professional and the content was well-structured, but the formatting could be more polished for client presentations.

My Opinion on ChatGPT Agents

Before testing the Agent, my expectations were pretty low so that I wouldn’t be disappointed. This was a good strategy because the Agent failed at the tasks that I struggle with the most.

However, it has a lot of promise — it made me realize that we are still in the very early stages of agents that can operate computers and browsers like humans do. The core infrastructure is here. The software does operate a virtual computer and browser, but it’s limited by the inherent nature of current models.

OpenAI doesn’t give a lot of details on the reinforcement-learning-trained model powering the Agent. Even though it’s optimized for computer control, I attribute the shortcomings of the Agent to that underlying model. It seems to still see the world through a textual lens, even though it can process images and screenshots.

That’s why it struggles with mouse movements and spatial tasks. The Agent can see where elements are positioned on a webpage, but it has to translate that visual information into coordinate-based commands rather than operating through the direct visual-motor coordination that humans use. When you drag an image in Canva, you’re not calculating pixel coordinates — you’re using spatial intuition. The Agent lacks this intuitive understanding of digital space.

This fundamental limitation explains why the Agent excelled at research and data verification tasks but stumbled on design work and precise interface manipulation. The future of computer-controlling agents will likely require new architectures that can bridge this gap between visual understanding and spatial interaction.

For now, ChatGPT Agent works best when you can define success through logic rather than aesthetics, and when the task involves information processing rather than creative spatial arrangement.

Conclusion

ChatGPT Agent is a virtual assistant that runs on a sandboxed computer, combining web browsing, code execution, and file handling. While its performance is uneven—good at research and data tasks, clumsy with visual or interface-heavy work—it’s a solid preview of how future AI tools might interact with software on our behalf.

I am a data science content creator with over 2 years of experience and one of the largest followings on Medium. I like to write detailed articles on AI and ML with a bit of a sarcastic style because you've got to do something to make them a bit less dull. I have produced over 130 articles and a DataCamp course to boot, with another one in the making. My content has been seen by over 5 million pairs of eyes, 20k of whom became followers on both Medium and LinkedIn.